VK introduces RuModernBERT, a neural network for processing conversational Russian

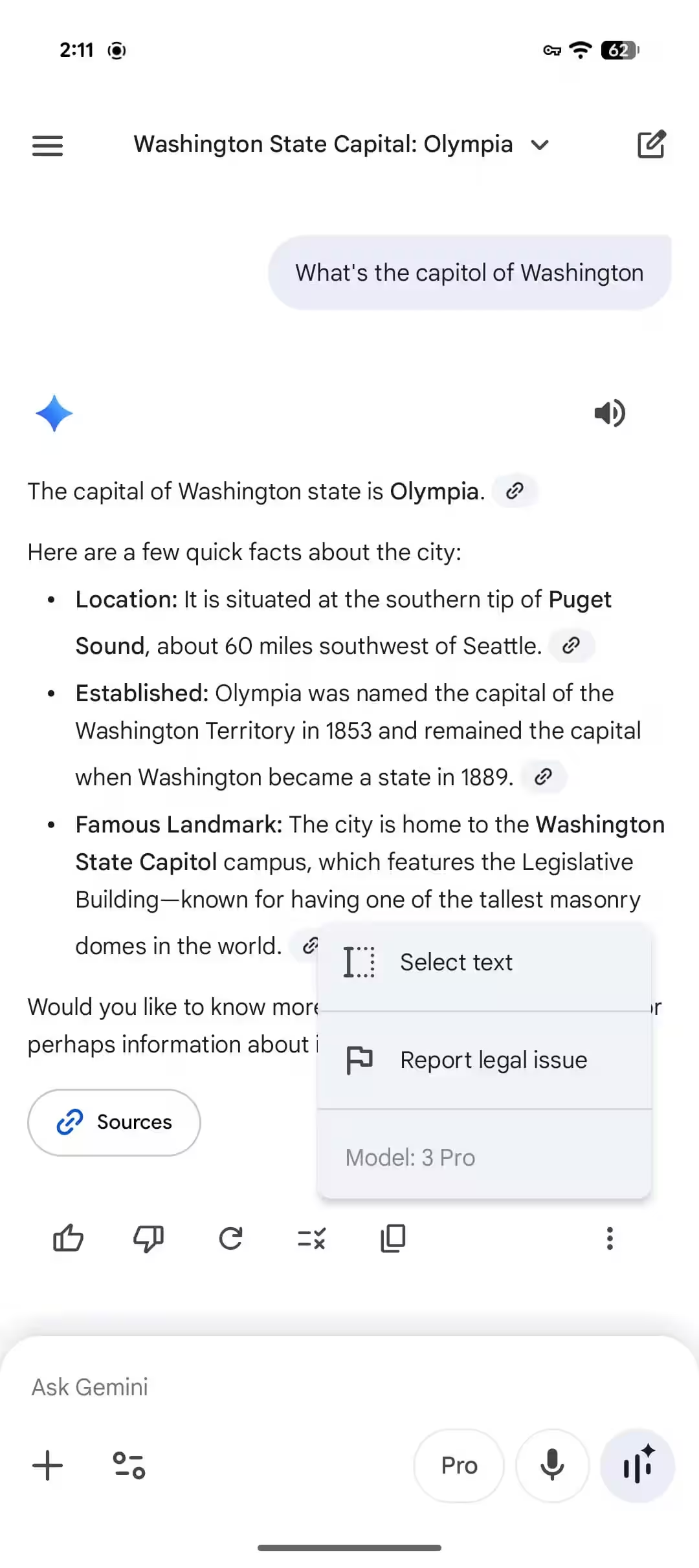

VK, formerly known as Mail.ru Group, has unveiled RuModernBERT, a model for analyzing and processing Russian-language text. According to its creators, a key feature is the ability to process long blocks of text as a whole, without splitting them into parts. Combined with the ability to run locally, without calls to external APIs, this significantly reduces the load on IT infrastructure.

“Engineers can use it for text processing tasks, including information extraction, tone analysis, search and ranking in applications and services. The model can understand a complex or long user query, such as in a search box, and find the most relevant information, videos, products or documents.”
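The search-and-ranking use case can be illustrated with a minimal sketch. It assumes that a query and each candidate item have already been turned into numeric vectors by some encoder; the three-dimensional vectors, the item names and the helper functions `cosine` and `rank` below are hypothetical and are not taken from VK's release.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank(query_vec, docs):
    """Return item names ordered from most to least similar to the query."""
    return sorted(docs, key=lambda name: cosine(query_vec, docs[name]), reverse=True)

# Toy vectors invented for illustration; a real system would obtain them
# from an embedding model rather than writing them by hand.
query = [0.9, 0.1, 0.0]
documents = {
    "video": [0.8, 0.2, 0.1],
    "product": [0.1, 0.9, 0.2],
    "article": [0.0, 0.2, 0.9],
}

ranking = rank(query, documents)
print(ranking)
```

The item whose vector points in nearly the same direction as the query comes first, which is the basic idea behind embedding-based retrieval.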

RuModernBERT was trained on a corpus of 2 trillion tokens of Russian and English text and program code. The maximum context length is 8,192 tokens. To make the model versatile, training drew on a variety of sources, including books, scientific articles, news publications and social media comments. This allows the model to handle contemporary language efficiently and to account for the features of conversational speech.
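To put the 8,192-token context in perspective, here is a rough back-of-the-envelope sketch of how many separate passes a document would need under different context limits. The 512-token figure is the limit of the original BERT and is used only for comparison; the 6,000-token document and the helper `chunks_needed` are hypothetical.

```python
import math

def chunks_needed(n_tokens, max_len, overlap=0):
    """Number of windows of max_len tokens needed to cover n_tokens,
    with consecutive windows sharing `overlap` tokens."""
    if overlap >= max_len:
        raise ValueError("overlap must be smaller than max_len")
    if n_tokens <= 0:
        return 0
    if n_tokens <= max_len:
        return 1
    stride = max_len - overlap  # new tokens covered by each extra window
    return 1 + math.ceil((n_tokens - max_len) / stride)

doc_tokens = 6000  # hypothetical document length

short_context = chunks_needed(doc_tokens, 512)              # classic BERT limit
short_with_overlap = chunks_needed(doc_tokens, 512, overlap=64)
long_context = chunks_needed(doc_tokens, 8192)              # limit stated in the article

print(short_context, short_with_overlap, long_context)
```

Under these assumptions the same document takes a dozen or more passes with a 512-token window and a single pass with an 8,192-token one.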

RuModernBERT is offered in several versions, including a full-size version with 150 million parameters and a lightweight version with 35 million parameters. In addition, the USER and USER2 versions have been updated to improve clustering and retrieval of relevant information. The USER2 version adds data compression designed to keep the loss of accuracy to a minimum. All versions are available on the Hugging Face platform.

The article VK unveils RuModernBERT neural network to process spoken Russian was first published at ITZine.ru.