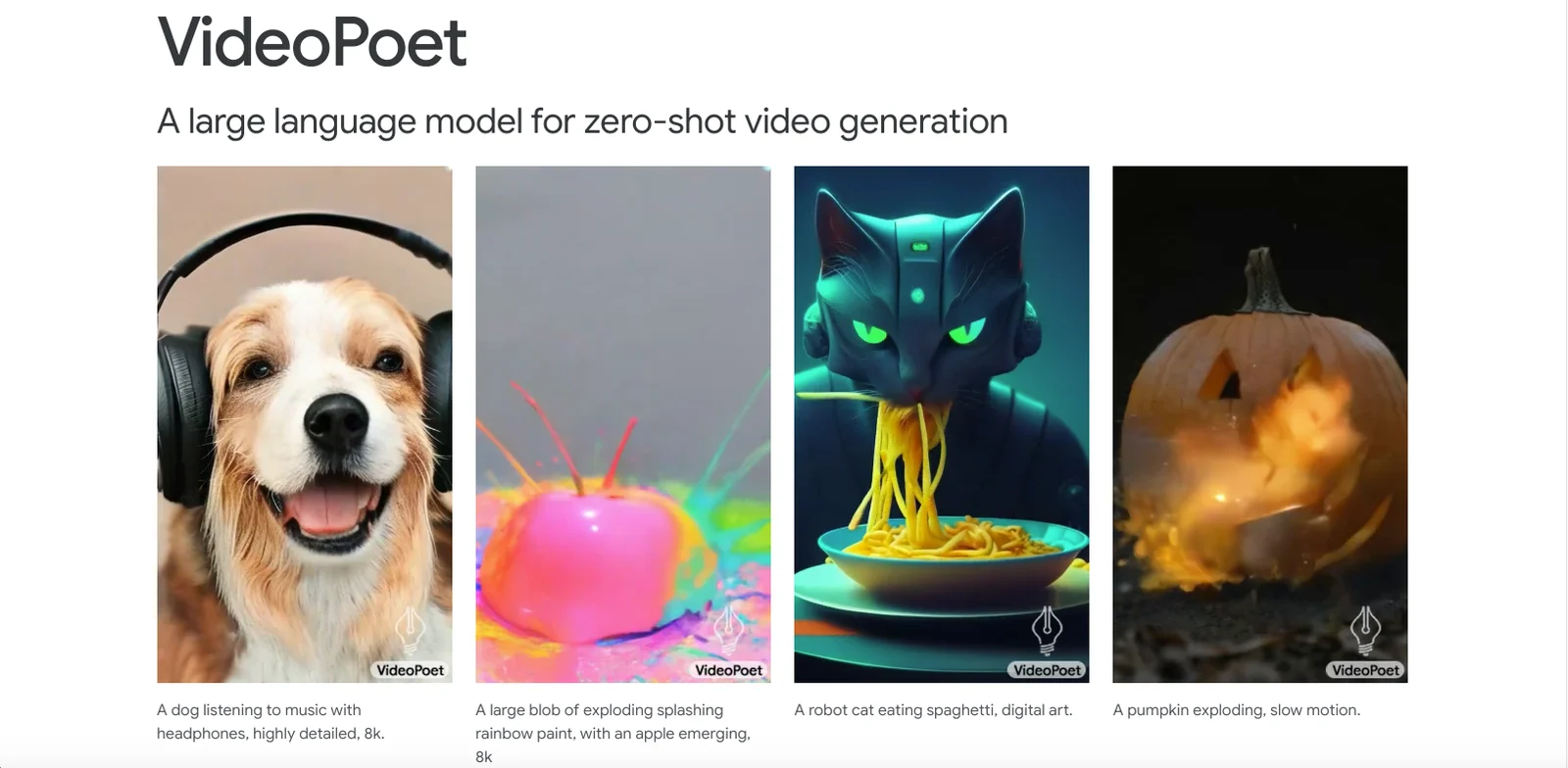

Google unveils VideoPoet, a neural network that creates videos

Google has unveiled VideoPoet, a new artificial intelligence model that can create audio and video content from text descriptions. According to Google, the model stands out for its ability to generate long, high-quality videos with visual effects and to edit existing videos.

Unlike many other video generators, which rely on open-source, diffusion-based methods, VideoPoet is built on a large language model (LLM), the type of model commonly used to generate text and code. It was trained on more than a billion text-image pairs and 270 million videos collected from various sources on the internet. Google claims that its own language model lets it produce longer, higher-quality videos than competitors, with fewer artifacts and limitations, especially when depicting moving objects.

VideoPoet offers a variety of features, including simulated camera movements, a wide range of visual styles, original audio generated from the video's context, and the ability to create vertical videos for Snapchat and TikTok.

A Google Research study found that up to 35% of survey participants preferred VideoPoet's results over those of similar products from other companies. Google has not yet announced a commercial launch, but details of the model's capabilities are available on the project's official website.