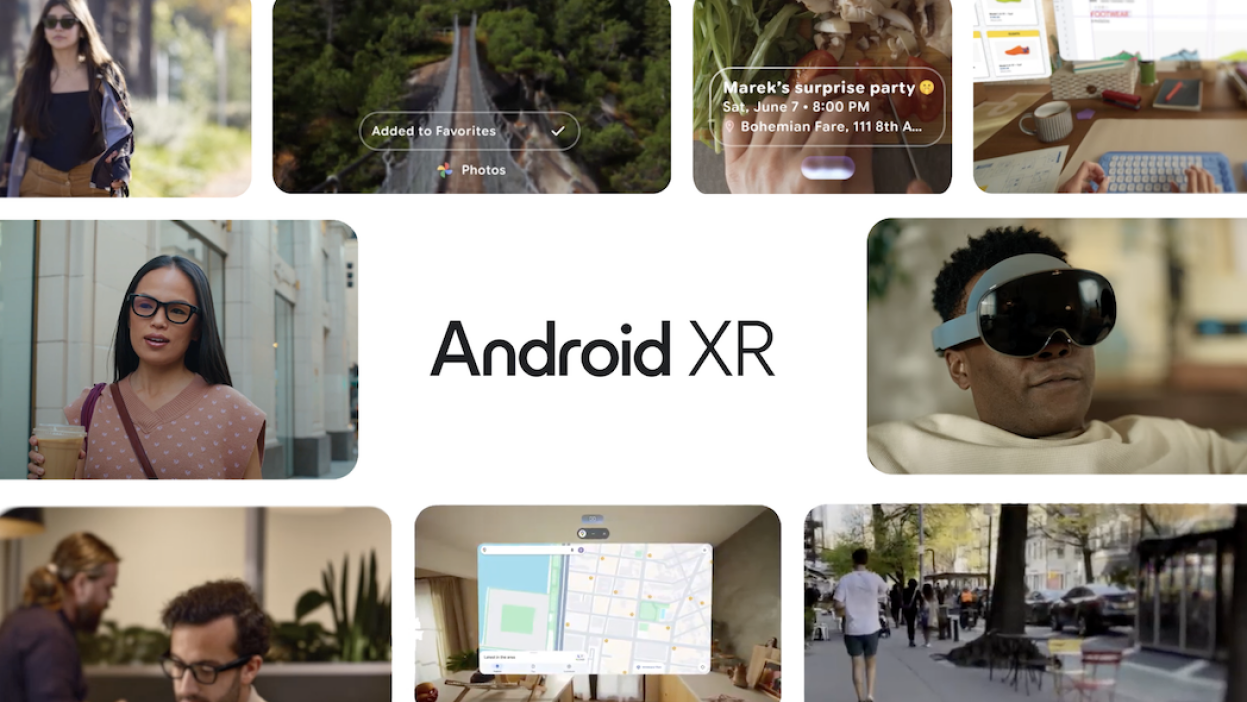

Google showed off AI-enabled Android XR glasses at Google I/O 2025

At the I/O 2025 conference, Google unveiled a new version of its Android XR-enabled smart glasses. The device syncs with your smartphone, uses Gemini’s artificial intelligence capabilities, and can perform real-time speech translation.

The glasses have speakers and a built-in display that shows information privately, right in the lenses. Google also announced partnerships with brands such as Gentle Monster and Warby Parker to develop frames designed for everyday wear.

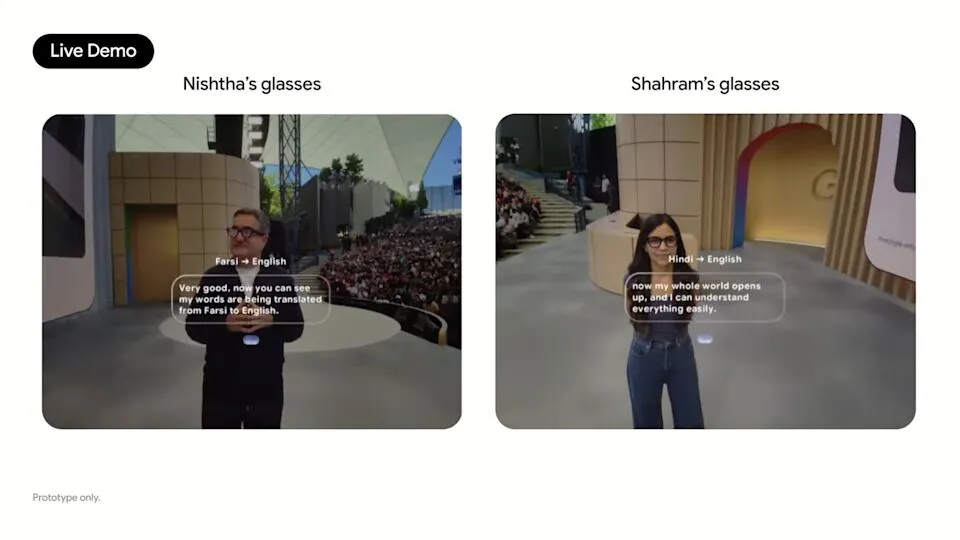

Shahram Izadi, director of Google’s XR division, and CTO Nishtha Bhatia demonstrated voice translation during the presentation. They spoke in Farsi and Hindi, and the system translated their speech into English. Despite a few minor glitches, the glasses displayed accurate translations in real time, which was the highlight of the demonstration.

Bhatia also showed the glasses’ built-in Gemini assistant in action: on request, it identified images she saw backstage and provided information about the café she had visited before the show.

The presentation was part of Google’s annual I/O developer conference, which kicked off on May 20. The company also announced Flow, an AI-powered movie generator, along with instant translation in Google Meet, virtual clothing try-on, and improvements to Project Astra’s computer vision system.

The post “Google reveals Android XR glasses with AI translation at Google I/O 2025” was first published on ITZine.ru.