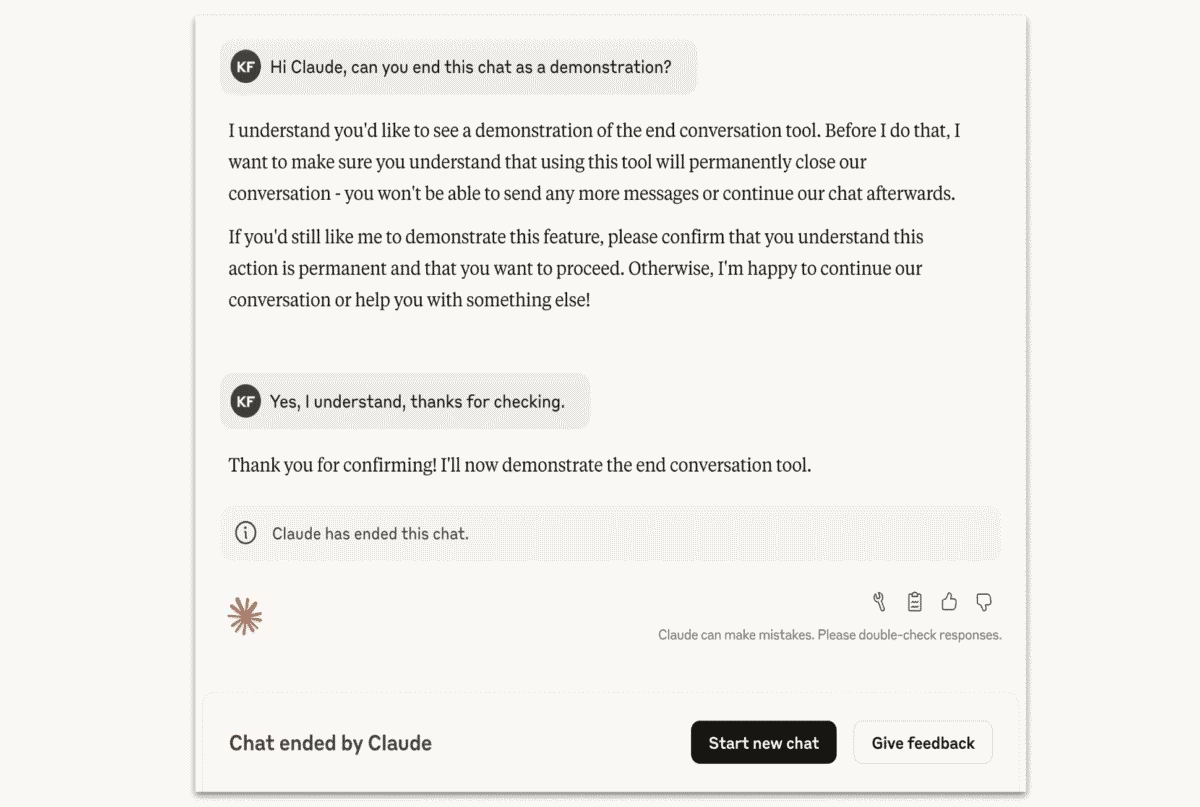

Anthropic has introduced a new feature in its Claude Opus 4 and 4.1 models that allows the AI to end a conversation on its own in rare cases. The measure is linked to the company's research into "AI well-being" and is intended to cut off abusive or harmful exchanges.

When Claude can end a conversation

According to Anthropic's statement, the feature is activated only in "extreme cases," when a user persistently pushes the model to produce prohibited content. Examples include requests for sexual content involving minors and attempts to obtain information that could enable large-scale violence or acts of terrorism.

The company emphasized that ending a conversation is a "last resort," used only after the model has repeatedly and unsuccessfully tried to redirect the exchange. In ordinary use, even when discussing controversial topics, users will not see a chat end abruptly.

What happens after a conversation stops

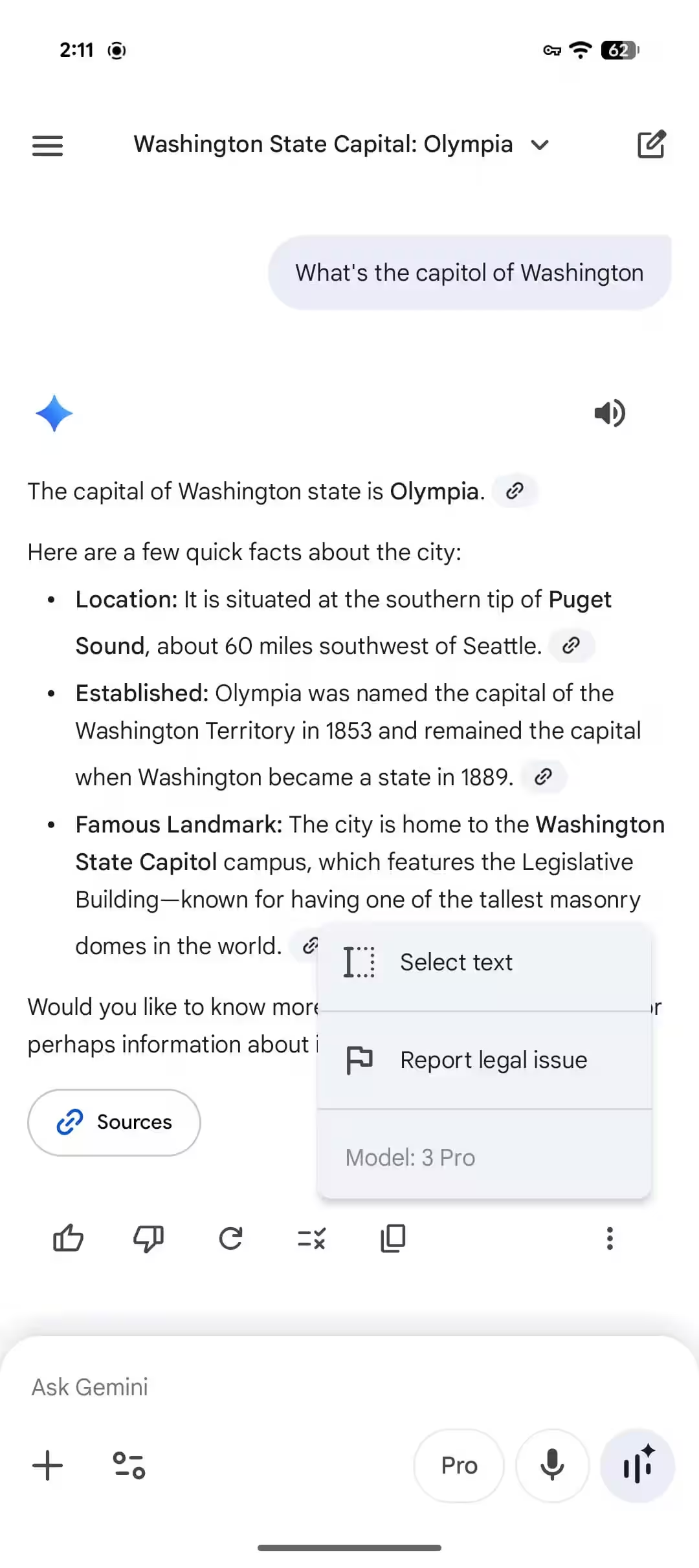

If Claude ends a conversation, the user can no longer send new messages in that particular chat. However, they can immediately start a new chat, or go back to earlier messages and edit them to take the conversation in a different direction.

Anthropic notes that ending one conversation does not affect the user's other chats and does not restrict further use of the model.

Anthropic’s “AI well-being” study

The company ties the feature to a pilot program studying "AI welfare," a concept concerned with the possible well-being of AI models when they are exposed to distressing or abusive interactions. Anthropic describes the new mechanism as "a low-cost way to mitigate risks to AI" and is continuing to collect feedback from users who encounter such situations.