Microsoft study shows that AI models still have trouble debugging software

AI models from OpenAI, Anthropic, and other leading labs are increasingly used for programming work. Google’s CEO, Sundar Pichai, revealed in October that 25% of the company’s new code is created using AI, while Mark Zuckerberg of Meta* has expressed his intention to deploy AI-based coding technologies widely within the social media company.

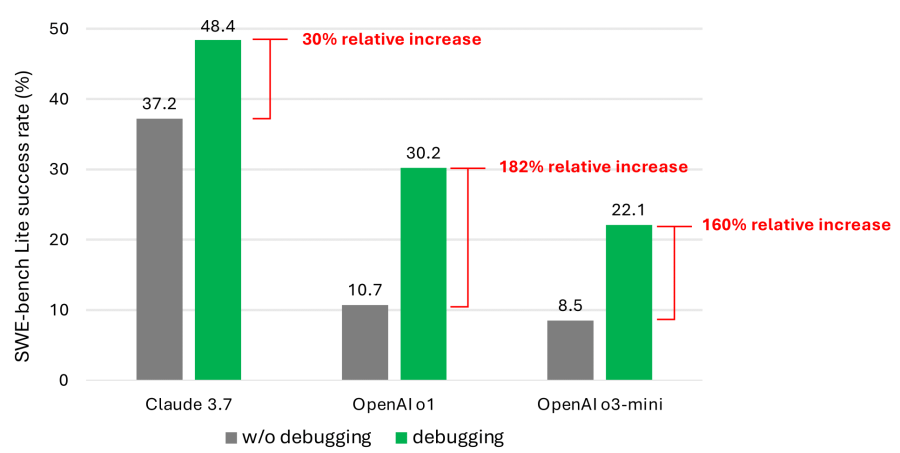

Nevertheless, even the best models currently available sometimes struggle to fix software bugs that experienced developers would resolve easily. A study conducted by Microsoft Research found that models such as Anthropic’s Claude 3.7 Sonnet and OpenAI’s o3-mini fail to solve many of the problems in SWE-bench Lite, a benchmark specifically designed to assess the ability to resolve software bugs. The results serve as an important reminder that, despite confident claims from companies like OpenAI, AI capabilities still fall short of human programming expertise.

The study authors tested nine different models as the basis for a “single prompt-based agent” with access to various debugging tools, including a Python debugger. The agent was tasked with solving a carefully selected set of 300 debugging tasks from SWE-bench Lite. As the co-authors report, even with newer, more capable models, the agent rarely completed more than half of the assigned tasks. Claude 3.7 Sonnet had the best average task success rate (48.4%), followed by OpenAI’s o1 (30.2%) and o3-mini (22.1%).

Why such results? Some models made inefficient use of the available debugging tools and did not seem to understand how different tools could help with specific tasks. The bigger problem, however, was a lack of data. The study’s co-authors suggest that the training sets of today’s models do not contain enough information about “sequential decision-making processes,” that is, the debugging steps performed by humans.

The authors of the study assert that training or fine-tuning could make models better interactive debuggers. To achieve this, however, specialized data must be collected, such as trajectories of agent interactions with a debugger that gather the information needed to fix a bug.
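To make the idea of a trajectory concrete, here is a minimal sketch of how one debugging session might be recorded for later fine-tuning. The schema is an assumption for illustration only: the class names `DebugStep` and `DebugTrajectory`, the field names, the task ID, and the sample debugger output are all hypothetical and are not taken from the Microsoft study.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class DebugStep:
    """One agent action plus the debugger's reply (hypothetical schema)."""
    command: str      # e.g. a pdb command such as "b calc.py:12"
    observation: str  # text the tool printed in response


@dataclass
class DebugTrajectory:
    """An ordered record of one debugging session (hypothetical schema)."""
    task_id: str
    steps: list[DebugStep] = field(default_factory=list)
    resolved: bool = False

    def record(self, command: str, observation: str) -> None:
        # Append the step so that the order of decisions is preserved.
        self.steps.append(DebugStep(command, observation))

    def to_jsonl_line(self) -> str:
        # One JSON object per line is a common layout for training corpora.
        return json.dumps(asdict(self))


# Illustrative session; the observations are made-up sample strings.
trajectory = DebugTrajectory(task_id="example-task-001")
trajectory.record("b calc.py:12", "Breakpoint 1 at calc.py:12")
trajectory.record("p total", "None")
trajectory.resolved = True
line = trajectory.to_jsonl_line()
```

Each line produced this way keeps the sequence of commands and observations together with the outcome, which is the kind of step-by-step information the authors say current training sets lack.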

These results are not surprising. Many studies have already shown that code-generating AI often introduces vulnerabilities and bugs because of its limited ability to understand program logic. For example, a recent evaluation of Devin, an AI-based coding tool, found that it successfully completed only three out of twenty test problems.

Nevertheless, Microsoft’s work represents one of the most detailed analyses of the problems these models face. While it is unlikely to diminish investor enthusiasm for AI-based coding tools, the study may make developers and their managers think twice before handing the coding process over to AI.

At the same time, a growing number of tech industry leaders are questioning whether AI can fully automate the writing of code. Bill Gates, co-founder of Microsoft, has expressed confidence that the programming profession will remain in demand, a sentiment echoed by Replit CEO Amjad Masad, Okta CEO Todd McKinnon, and IBM CEO Arvind Krishna.

* Meta is recognized as an extremist organization in the Russian Federation, and its activities are banned there.