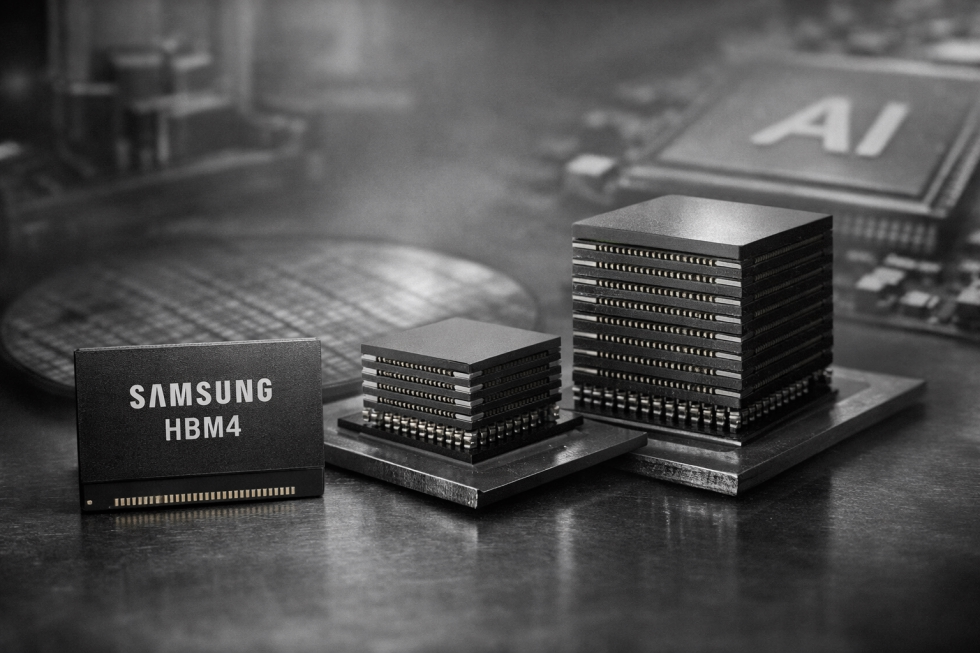

Samsung becomes first to mass-produce HBM4 memory for Nvidia’s Rubin AI accelerators

Samsung, according to South Korean sources, is set to kick off mass production of its sixth-generation HBM4 (High-Bandwidth Memory) chips this month. These cutting-edge memory modules are expected to power Nvidia’s next-gen AI accelerators, codenamed Vera Rubin, scheduled for release by the end of this year.

Reports suggest Samsung could start delivering HBM4 to Nvidia as early as next week, coinciding with Lunar New Year celebrations. This timeline, previously rumored, now appears confirmed. The new chips will be integrated into Rubin GPUs, designed specifically for generative AI servers and hyperscale data centers. Samsung has recently ramped up investments to expand production capacity focused exclusively on HBM4.

If the reports hold true, Samsung will be the first company to bring HBM4 to mass production, leapfrogging SK Hynix, the current dominant player in the HBM market. Samsung lagged behind SK Hynix and Micron in the HBM3 and HBM3E generations, so the move to HBM4 appears to be its comeback and a chance to reclaim a competitive edge.

For context, today’s AI accelerators like Nvidia’s B200 and AMD’s MI350 rely on HBM3E memory. SK Hynix and Micron dominate that segment, with Samsung currently holding third place. However, industry forecasts now predict Samsung will emerge as the largest HBM4 supplier in the upcoming cycle.

It’s also reported that Samsung’s HBM4 chips have passed Nvidia’s final validation, prompting Nvidia to place orders immediately. Eager for early access to HBM4, Nvidia reportedly influenced Samsung’s production schedule to meet its own tight timelines.

Beyond Nvidia, Samsung’s potential HBM4 clients include tech giants Amazon and Google (Alphabet), both developing proprietary AI accelerators for future data centers. If these deals are confirmed, Samsung could solidify a pivotal role in the next phase of the AI hardware race.