Researchers have discovered why LLMs “reason” incorrectly

A study by scientists at Arizona State University (ASU) has questioned the reliability of the popular Chain-of-Thought (CoT) approach in large language models (LLMs). According to the authors, CoT mimics logical reasoning rather than actually utilizing it, relying on patterns from training data. The paper offers developers practical guidelines for evaluating and improving AI systems, as well as a new methodology for analyzing the weaknesses of LLMs.

Chain-of-Thought: promises and limitations

The CoT prompting method, in which AI “reasons step-by-step,” has been widely praised for its high accuracy on complex tasks. However, new data shows that this “startling” behavior of models is not the result of abstract thinking, but rather the honed reproduction of familiar language patterns.

Earlier work had already noted that LLMs tend to rely on superficial semantic features rather than logic. As a result, the models produce plausible but flawed reasoning, especially when faced with non-standard tasks or irrelevant information.

New approach: a “data lens” instead of “thinking”

The authors of the study suggested that CoT should not be viewed as a manifestation of intelligence, but as a form of structural pattern matching. Their hypothesis: CoT success is based not on the ability to make inferences, but on the ability to generalize to tasks similar in structure to the training examples. Any attempt to apply CoT outside the “data domain” on which the model was trained results in a dramatic drop in accuracy.

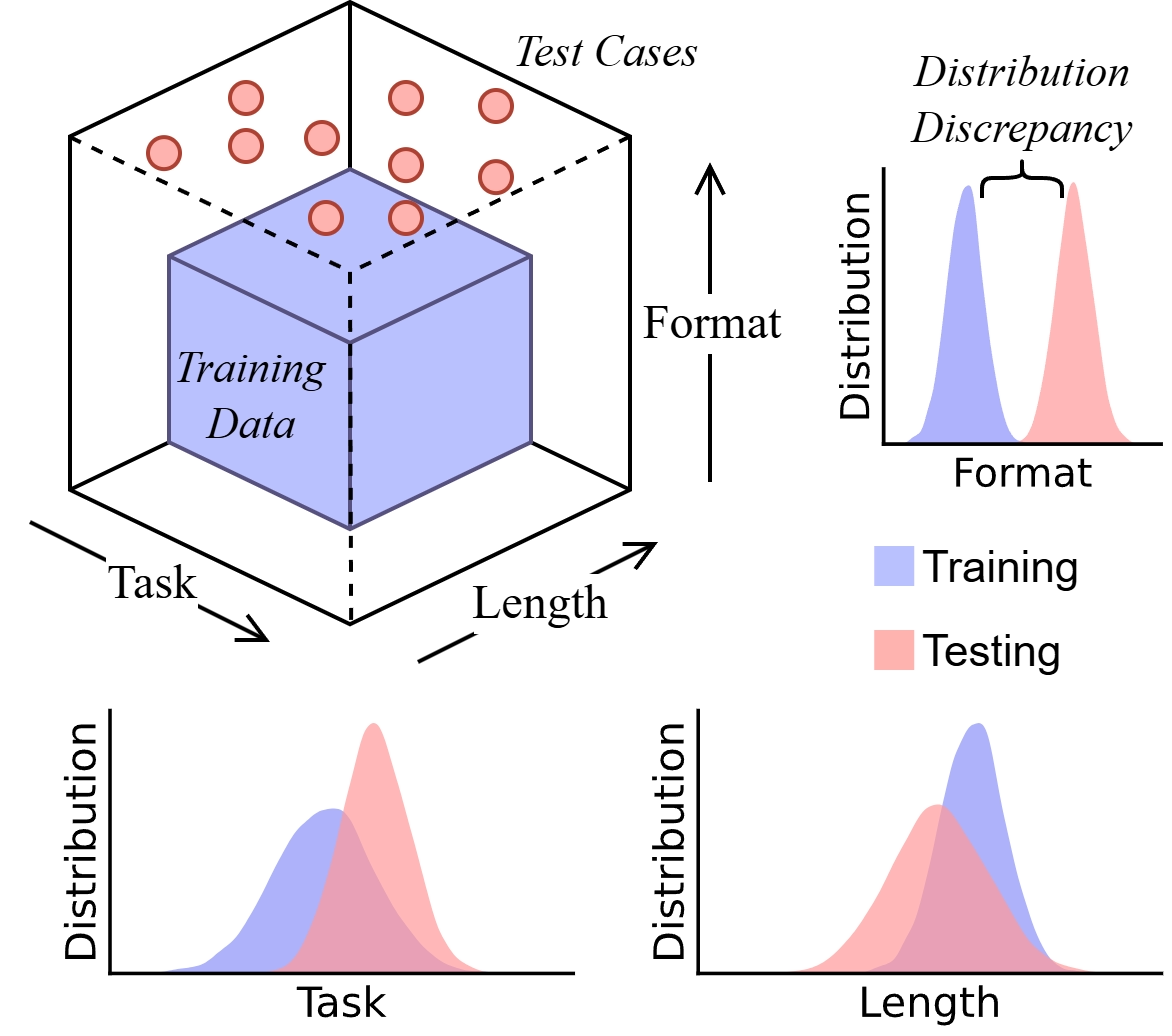

To test this idea, the researchers examined how CoT behaves under three types of “distributional shift”:

- Task generalization: will the model handle a new type of task?

- Length generalization: will it handle logical chains of a different length?

- Format generalization: what is the impact of changing the wording and structure of the query?
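The three shifts can be made concrete with a small probe generator. The sketch below is only an illustration on a hypothetical toy task (chained letter transformations); the operations `rot` and `cyc`, the prompt templates, and the assumption that only the `rot, rot` composition was seen in training are all invented here and are not taken from the study's setup.

```python
import random
import string

ALPHABET = string.ascii_uppercase


def rot(text: str, k: int = 13) -> str:
    """Shift every letter k places forward in the alphabet (Caesar shift)."""
    return "".join(ALPHABET[(ALPHABET.index(c) + k) % 26] for c in text)


def cyc(text: str, k: int = 1) -> str:
    """Rotate the string k positions to the left."""
    k %= len(text)
    return text[k:] + text[:k]


OPS = {"rot": rot, "cyc": cyc}


def apply_chain(text: str, chain: list[str]) -> str:
    """Apply the named operations in order and return the final string."""
    for name in chain:
        text = OPS[name](text)
    return text


def make_prompt(text: str, chain: list[str],
                template: str = "Apply {ops} to {text}:") -> str:
    """Render a query; the template is the only thing a format shift changes."""
    return template.format(ops=" then ".join(chain), text=text)


def build_probes(seed: int = 0) -> dict[str, tuple[str, str]]:
    """Return one (prompt, expected answer) pair per kind of shift."""
    rng = random.Random(seed)
    text = "".join(rng.choice(ALPHABET) for _ in range(4))
    seen = ["rot", "rot"]  # assumption: the only composition seen in training
    variants = {
        "in_distribution": (seen, "Apply {ops} to {text}:"),
        "task_shift": (["rot", "cyc"], "Apply {ops} to {text}:"),         # new composition
        "length_shift": (["rot", "rot", "rot"], "Apply {ops} to {text}:"),  # longer chain
        "format_shift": (seen, "{text} -> [{ops}] ="),                    # same task, new wording
    }
    return {
        name: (make_prompt(text, chain, template), apply_chain(text, chain))
        for name, (chain, template) in variants.items()
    }


if __name__ == "__main__":
    for name, (prompt, answer) in build_probes().items():
        print(f"{name:16s} {prompt!r} expected={answer}")
```

Each probe changes exactly one thing relative to the in-distribution case, so a drop in accuracy can be attributed to a single kind of shift.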

The experiments were conducted in a controlled environment built on DataAlchemy, the authors’ own framework, which makes it possible to train models from scratch and track exactly where and how failures occur.

“Our goal is to create a space for openly analyzing the limits of AI’s capabilities,” said co-author Chengshuai Zhao, a graduate student at ASU.

Results: CoT collapses outside the training distribution

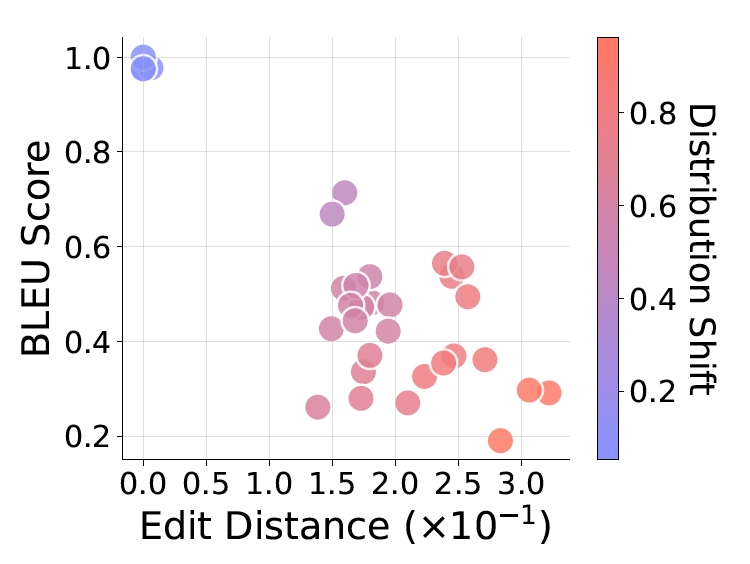

The authors concluded that CoT reasoning is “structured pattern matching” constrained by the statistics of the training data. Even minimal departures from the familiar structure lead to failures:

- On new task types, models simply repeated similar patterned responses.

- When the length of the logic chain changed, models tried to add or drop steps to bring the chain back to a familiar length.

- Even small edits to the query text dramatically reduced accuracy.

Interestingly, most of these problems can be partially mitigated by targeted supervised fine-tuning (SFT) on a small number of new examples. However, this only supports the main hypothesis: the models do not “understand” the task but “memorize” new patterns.

Practical insights for AI developers

The researchers emphasize that CoT should not be thought of as a reliable technology for mission-critical applications. They offer three key pieces of advice:

- Don’t overestimate the stability of CoT. Models can produce “smooth absurdity” – logically incorrect but plausible reasoning. This is dangerous in law, finance, and other fields where error is critical. Expert verification is mandatory.

- Test outside the training distribution. Standard validation, where the test data resemble the training data, does not reveal weaknesses. Models need to be deliberately validated on tasks with altered structure, length, and wording.

- Do not rely entirely on fine-tuning. SFT helps “patch” the model for new data but does not make it generalize. It expands the model’s training “bubble” without going beyond it.

Task-specific models: fine-tuning instead of generality

The authors emphasize that, despite the limitations of CoT, models can still be useful. Most business applications deal with narrow, predictable tasks. If you test a model’s behavior in advance against specific parameters (task type, length, and query format), you can determine the boundaries of its “confidence zone.”
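One way to picture such a “confidence zone” is to tabulate accuracy per value of each tested parameter and flag the values that clear a chosen bar. The following is a minimal sketch under stated assumptions: the record layout (`task`, `length`, `format`, `correct`), the function name `confidence_zone`, and the 0.9 threshold are hypothetical choices for illustration, not something the study prescribes.

```python
from collections import defaultdict


def confidence_zone(records, threshold=0.9, min_samples=1):
    """Summarize accuracy per parameter value.

    records: iterable of dicts with keys 'task', 'length', 'format'
             and a boolean 'correct' (hypothetical layout).
    Returns {dimension: {value: {"accuracy": float, "trusted": bool}}}.
    """
    totals = defaultdict(lambda: [0, 0])  # (dimension, value) -> [correct, seen]
    for record in records:
        for dim in ("task", "length", "format"):
            cell = totals[(dim, record[dim])]
            cell[0] += int(record["correct"])
            cell[1] += 1

    zone = defaultdict(dict)
    for (dim, value), (ok, seen) in totals.items():
        if seen < min_samples:
            continue  # too few samples to say anything about this value
        accuracy = ok / seen
        zone[dim][value] = {"accuracy": accuracy, "trusted": accuracy >= threshold}
    return dict(zone)


if __name__ == "__main__":
    # Hand-made illustrative records, not results from the study.
    demo = [
        {"task": "rot", "length": 2, "format": "plain", "correct": True},
        {"task": "rot", "length": 2, "format": "plain", "correct": True},
        {"task": "rot", "length": 3, "format": "plain", "correct": False},
        {"task": "cyc", "length": 3, "format": "noisy", "correct": False},
    ]
    for dim, values in confidence_zone(demo).items():
        print(dim, values)
```

This looks at each parameter on its own, which is a simplification; a real evaluation would likely also examine combinations of parameters and use far more samples per value.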