Anthropic’s new AI model will be able to control your computer

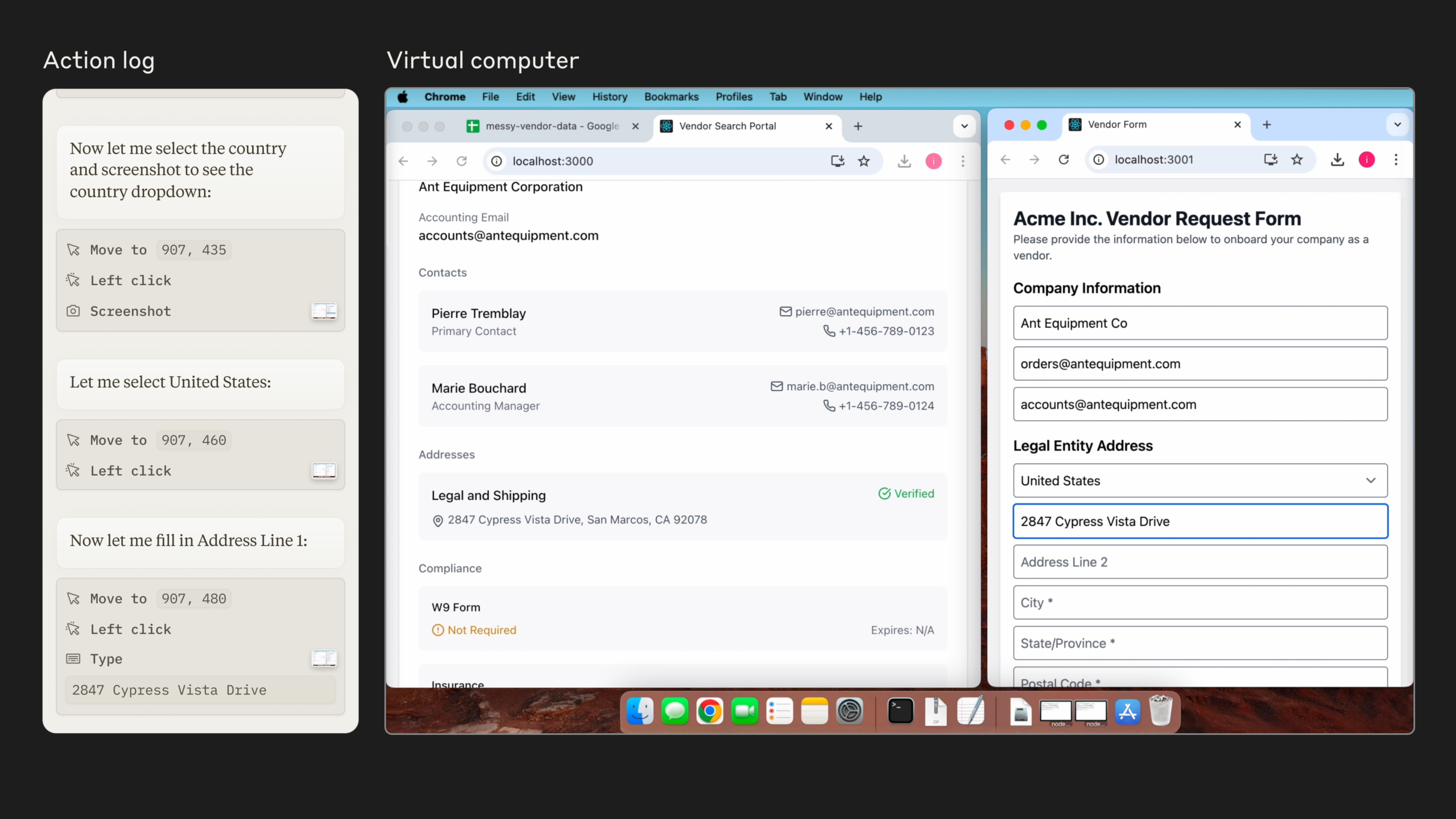

Anthropic has announced an updated version of its Claude 3.5 Sonnet AI model that can now perform actions on a PC by mimicking user input: keystrokes, mouse clicks and cursor movements. The capability is delivered through a new API feature called “Computer Use,” currently available in public beta. With it, Claude 3.5 Sonnet can interact with any application and perform tasks as if a real person were sitting at the computer.

Key features of Computer Use

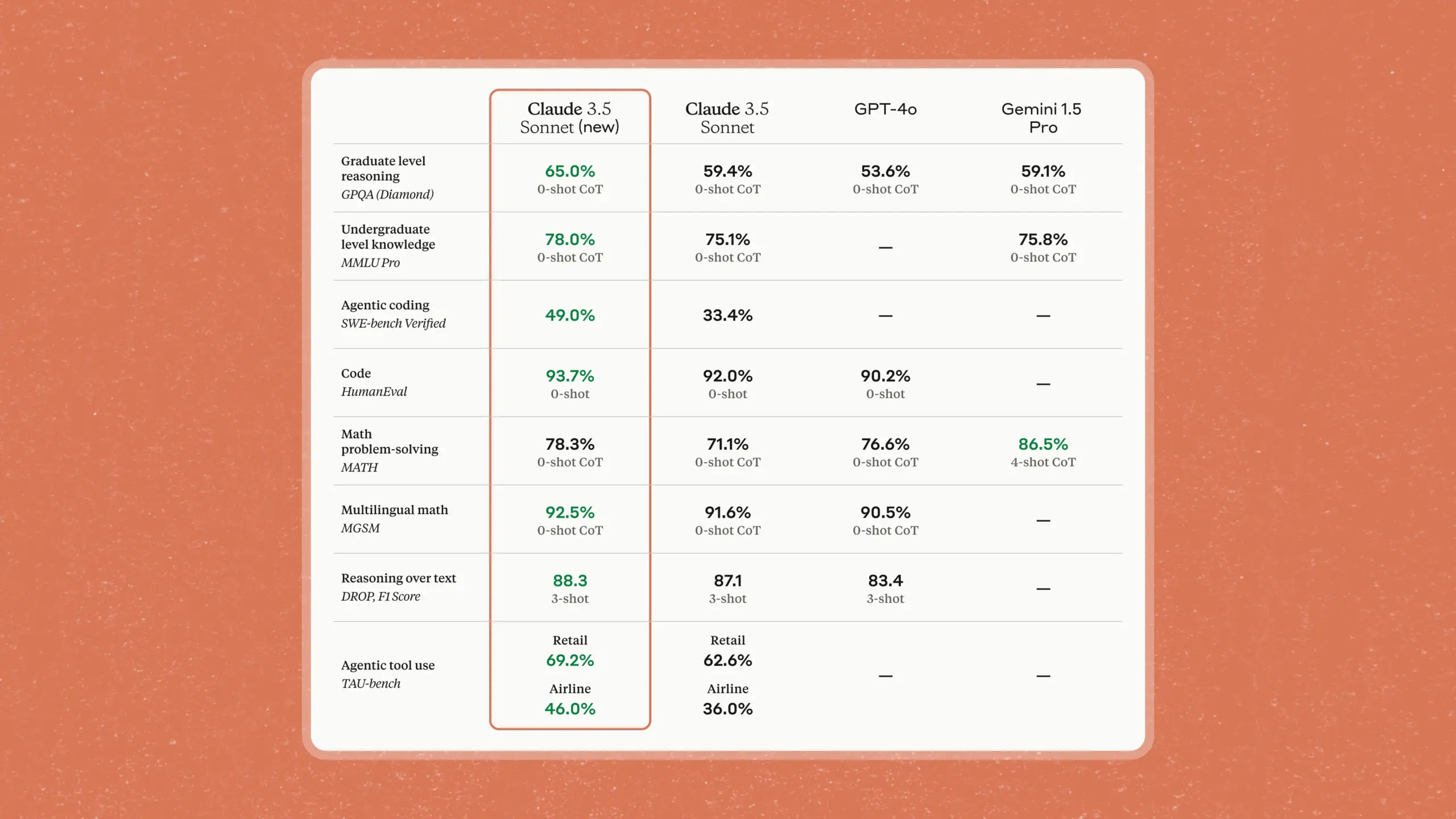

Anthropic said Claude 3.5 Sonnet can analyze what is visible on the screen and carry out commands that developers give it, such as clicking on specific areas of the screen. Developers can test the API through Amazon Bedrock, Google Cloud Vertex AI, or directly through Anthropic’s API. Even without Computer Use, the updated model delivers performance improvements over the previous version of Sonnet.
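For readers curious what testing the feature “directly through Anthropic’s API” involves, here is a minimal Python sketch that only builds a request for the Messages API endpoint (https://api.anthropic.com/v1/messages) with the computer tool enabled. The model ID, the `computer_20241022` tool type and the `computer-use-2024-10-22` beta header reflect Anthropic’s documentation at launch and may have changed since; the helper name `build_computer_use_request` and the placeholder key are illustrative assumptions. Nothing is sent over the network.

```python
import json

# Identifiers as documented by Anthropic at the launch of the Computer Use beta
# (assumption: they may have been superseded, so check the current docs).
MODEL = "claude-3-5-sonnet-20241022"
COMPUTER_TOOL_TYPE = "computer_20241022"
BETA_HEADER = "computer-use-2024-10-22"


def build_computer_use_request(prompt: str, width: int = 1024, height: int = 768) -> tuple[dict, dict]:
    """Return (headers, body) for a Messages API call that enables the computer tool.

    Hypothetical helper: it only assembles the request and does not send it.
    """
    headers = {
        "x-api-key": "YOUR_API_KEY",        # placeholder, not a real key
        "anthropic-version": "2023-06-01",
        "anthropic-beta": BETA_HEADER,      # opts in to the Computer Use beta
        "content-type": "application/json",
    }
    body = {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [
            {
                "type": COMPUTER_TOOL_TYPE,
                "name": "computer",
                # Screen size the model should assume when choosing coordinates.
                "display_width_px": width,
                "display_height_px": height,
            }
        ],
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, body


if __name__ == "__main__":
    headers, body = build_computer_use_request("Use data from my computer to fill out this form")
    print(json.dumps(body, indent=2))
```

Posting `body` as JSON with these headers (and a real key) starts the exchange; the model then answers with requests for screen actions that the developer’s own code has to carry out.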

App automation: how it works

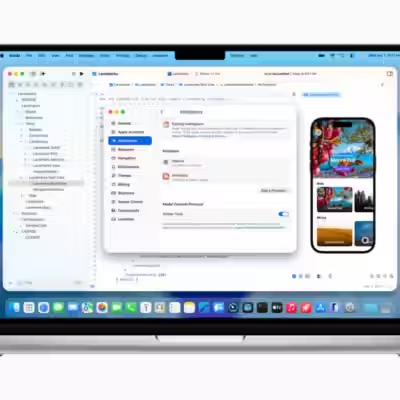

The new PC automation tool is far from the first on the market: companies such as Relay, Induced AI and Automat already offer solutions for automating actions on a PC. Anthropic, however, describes its approach as an “action execution layer” that lets the model execute operating-system-level commands. The updated Claude 3.5 Sonnet can also interact with websites and apps, so it can be used in scenarios ranging from filling out forms to performing complex actions in desktop applications.
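In practice, the execution happens on the developer’s side: the model replies with `tool_use` blocks whose input names an action such as `mouse_move`, `left_click`, `type`, `key` or `screenshot`; the developer’s code performs that action and returns the result (usually a fresh screenshot) to the model as a `tool_result`. The sketch below assumes the action names of the launch-era `computer_20241022` tool. `FakeDesktop` and `execute_action` are hypothetical and only record actions instead of driving a real operating system.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDesktop:
    """Hypothetical stand-in for an OS-level input layer: it only logs actions."""
    cursor: tuple[int, int] = (0, 0)
    log: list[str] = field(default_factory=list)


def execute_action(desktop: FakeDesktop, tool_input: dict) -> str:
    """Apply one computer-tool action, i.e. the "input" of a tool_use block.

    Assumption: action names follow the launch-era computer tool, where
    left_click acts at the current cursor position set by mouse_move.
    """
    action = tool_input["action"]
    if action == "mouse_move":
        x, y = tool_input["coordinate"]
        desktop.cursor = (x, y)
        desktop.log.append(f"move {x},{y}")
    elif action == "left_click":
        x, y = desktop.cursor  # click wherever the cursor currently is
        desktop.log.append(f"click {x},{y}")
    elif action == "type":
        desktop.log.append(f"type {tool_input['text']!r}")
    elif action == "key":
        desktop.log.append(f"key {tool_input['text']}")  # e.g. "Return"
    elif action == "screenshot":
        desktop.log.append("screenshot")
        # A real executor would capture the screen and send the image back.
        return "<screenshot placeholder>"
    else:
        raise ValueError(f"unsupported action: {action}")
    return "ok"


if __name__ == "__main__":
    desk = FakeDesktop()
    for step in [
        {"action": "mouse_move", "coordinate": [120, 340]},
        {"action": "left_click"},
        {"action": "type", "text": "Jane Doe"},
        {"action": "key", "text": "Return"},
    ]:
        execute_action(desk, step)
    print(desk.log)
```

Keeping the executor separate from the model call is what lets the developer decide which actions are allowed at all; a real implementation would replace the logging with calls to an operating-system input tool.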

“People continue to have complete control over their PCs, directing Claude’s actions with specific commands such as ‘use data from my computer to fill out this form,’” an Anthropic spokesperson told TechCrunch. On the Replit platform, for example, the model is being used as a tool to evaluate applications during development. Canva is also exploring ways to apply the model to the design process.

Safety issues and precautions

Anthropic acknowledges that such features may carry risks. Claude 3.5 Sonnet was not trained on users’ screenshots, and the company has put systems in place to restrict the model’s actions in potentially risky situations, such as posting on social media or interacting with government websites. Before launch, the new Sonnet underwent safety testing by the U.S. and U.K. AI Safety Institutes.

The company will also retain all screenshots captured by Computer Use for 30 days so that the model’s actions can be traced. Anthropic stresses that it will share this data with third parties only in response to requests made under applicable law.

New budget Haiku model

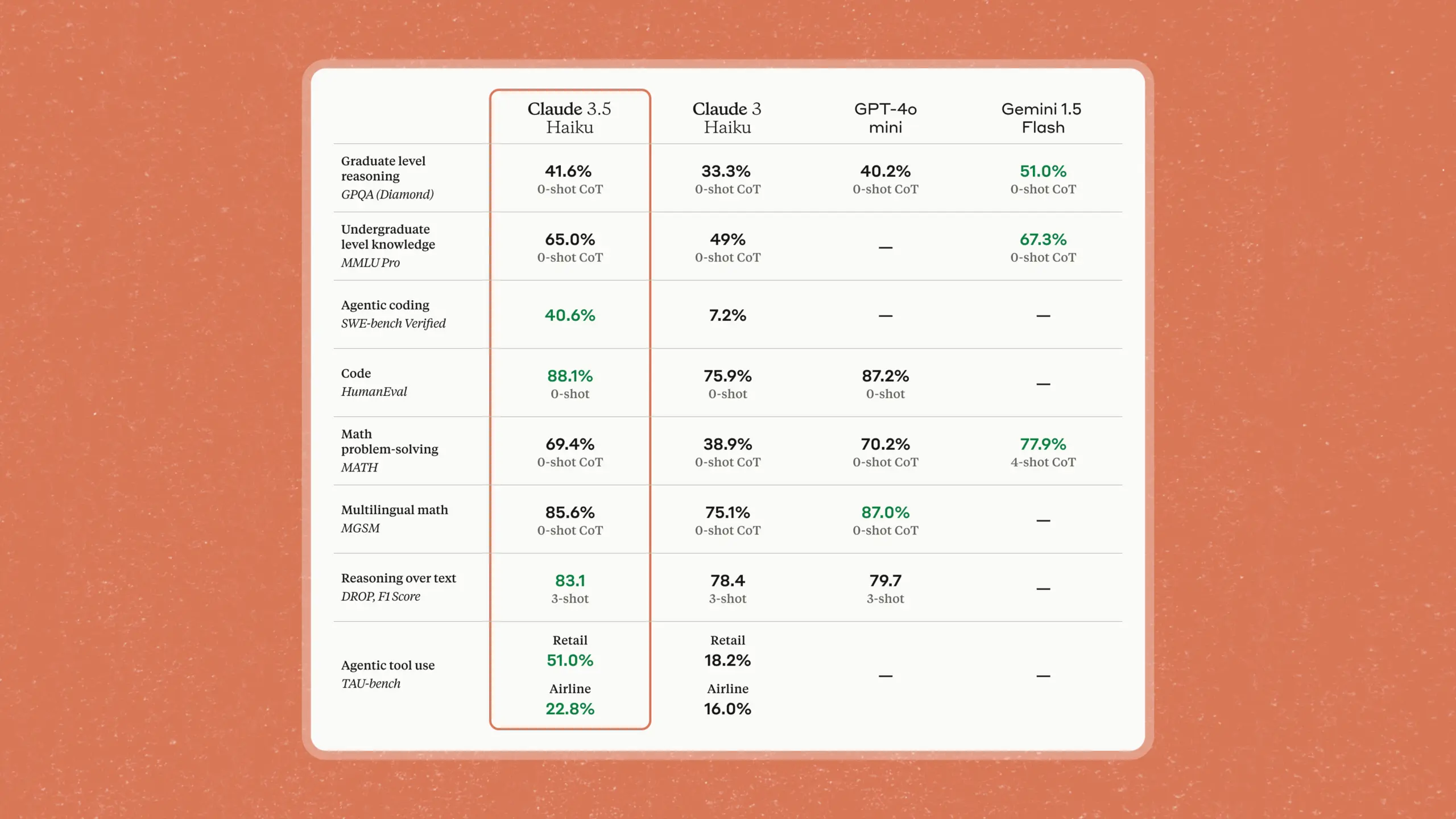

Alongside the Claude 3.5 Sonnet update, Anthropic announced a new budget model, Claude 3.5 Haiku, due for release in the coming weeks. Haiku promises to match the performance of the flagship Claude 3 Opus model at a lower price and with low latency. It will first be available as a text-only model, followed later by a multimodal version that supports both text and image analysis.

Anthropic continues to improve its Claude line of models in an effort to give users safe and efficient automation tools.