Top 7 worst processors of all time

The list of the best processors is constantly changing, with AMD and Intel fighting for the top spot in every ranking. While both companies have released some fantastic processors, they have also released quite a few bad ones, and some of those have earned a reputation as the worst processors of all time.

Digging through the archives of the last few decades, we found the seven worst processors that AMD and Intel have ever released. Some of them, such as the Core i9-11900K and FX-9590, are relatively recent, while others date back to the early 2000s. Whatever the case, each of these seven processors earned its “worst” status for a different reason: price, power consumption, heat output, or just plain poor performance.

Intel Core i9-11900K (2021)

This one is still fresh. Despite plenty of negative reviews, the Core i9-11900K hasn’t gotten the bad publicity it really deserves. At the time of its release, Intel was publicly going through a transition period. Pat Gelsinger had taken over as CEO just a month before the processor’s release, and Intel was focused on its new processor roadmap and the 12th-generation Alder Lake architecture. The release of the 11th-generation chips seemed like an obligatory step, something Intel had to do to show it had something new. The chips were quickly swept out of public view, but the Core i9-11900K remains a stain on the generation.

Gamers Nexus called it “pathetic” upon release, and even TechRadar said “it feels like a desperate attempt to hold onto relevance while the company works on its real next step.” Hardware Unboxed called it “Intel’s worst flagship processor possibly ever.” The reasons are obvious in retrospect. Intel was struggling to gain ground against AMD’s Ryzen 5000 processors, and the Core i9-11900K addressed that problem in the worst possible way.

The Core i9-11900K was the last outing of Intel’s 14nm process, from which Intel had been squeezing marginal improvements for nearly seven years. It was also the breaking point. Not only did it use an outdated process, it also had fewer cores than the previous-generation Core i9-10900K. Intel cut its flagship from 10 cores to eight while power consumption went up. That didn’t bode well against AMD’s fairly efficient 12-core Ryzen 9 5900X and 16-core Ryzen 9 5950X, which offered better performance at lower prices.

The situation was dire. In reviews, the Core i9-10900K often beat the Core i9-11900K, which in turn fell behind AMD’s Ryzen chips. Intel sometimes managed to squeeze out a few extra frames in games, but that wasn’t enough to justify the CPU’s lower overall performance and much higher power consumption. While Intel has stumbled many times over the years, no processor better embodies the idea of “spend more, get less” than the Core i9-11900K.

Although Intel quickly moved on to the far superior Core i9-12900K, the impact of the 11th-generation processors can still be felt today. Intel was the undisputed market leader up to that point, but today it plays the role of underdog, often having to undercut AMD to remain competitive. This shift happened right around the release of the Core i9-11900K.

AMD FX-9590 (2013)

We could have picked any of AMD’s Bulldozer processors for this list, but none shows how disastrous the architecture was quite like the FX-9590. Like Intel’s 11th-generation chips, it was AMD’s last hurrah for Bulldozer before the company introduced the Zen architecture. When it came out, the FX-9590 was the first processor to reach a clock speed of 5 GHz out of the box, with no overclocking required. It was a huge step, but it came at a huge cost in power consumption.

Let’s back up for a moment. The FX-9590 actually uses the Piledriver architecture, a revision of the original Bulldozer design. Piledriver was no dramatic improvement, but it did fix some of Bulldozer’s problems, particularly task scheduling across a large number of threads. With the FX-9590, AMD took that more efficient architecture and pushed the clock speed as high as it could, resulting in a chip that consumed 220W.

This is insane even by today’s standards with processors like the Core i9-14900K, but it was even worse in 2013, when this processor came out. Intel’s sixth- and seventh-generation processors hovered around 85W, while the eighth-generation chips barely crossed the 100W mark. The FX-9590 used the same AM3+ socket as the cheaper AMD processors of the day, but it required a flagship motherboard and a beefy liquid cooling system to work properly.

Reports of freezing, extremely high temperatures, and even motherboard failures followed. Reviews at the time showed that the FX-9590 was powerful, which was all the more impressive considering how inexpensive it was compared to Intel’s competing chips. But once you factored in a massive cooling system and a high-end motherboard to keep the processor from throttling itself, the AMD option ended up costing much more than Intel’s. It didn’t help that in many cases AMD’s advantage over Intel was negligible, with Team Blue achieving similar performance with half the power consumption and half the number of cores.

The FX-9590 came to symbolize the failure of Bulldozer as a whole, and it hit AMD where it hurt the most. In 2012, the year before the processor was released, AMD reported a loss of $1.18 billion.

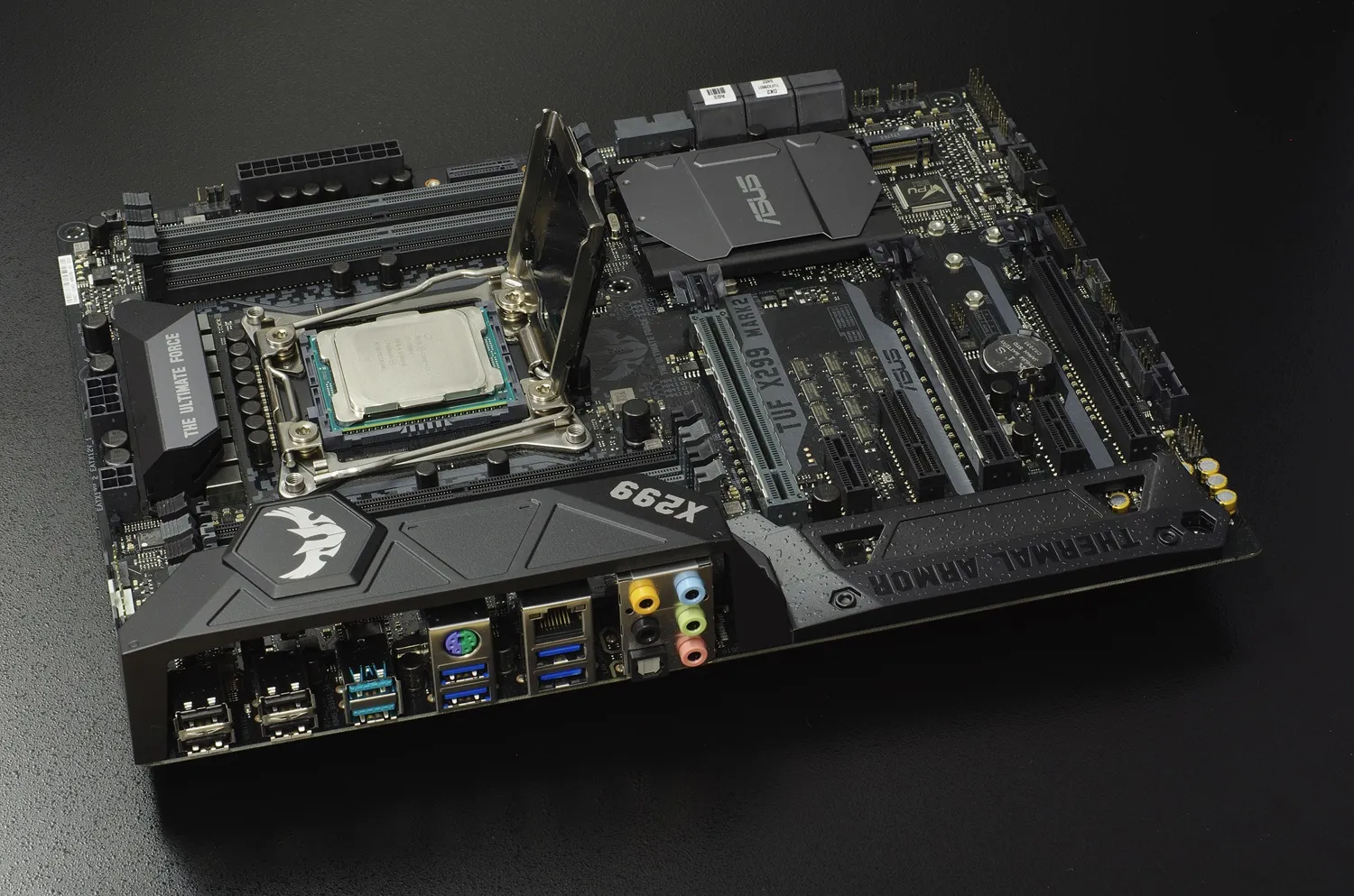

Intel Core i7-7740X (2017)

The Intel Core i7-7740X isn’t necessarily a bad processor, but it’s certainly a confusing one. Years ago, Intel maintained a lineup of X-series processors for the high-end desktop (HEDT) platform. In the last few generations, the company has abandoned HEDT (although AMD still supports it with Threadripper 7000), but it used to be a cornerstone of Intel’s lineup. The company supported two separate platforms. HEDT allowed for powerful motherboards with large numbers of PCIe lanes and advanced memory support, as well as processors with more cores, while the mainstream lineup offered a more affordable price point for those who didn’t need what the X-series processors could offer.

But then Intel tried to mix oil and water. In an attempt to make its HEDT platform more affordable (normally you’d easily shell out $1,000 for a processor in this lineup), Intel introduced cheaper chips called Kaby Lake-X. The lineup included the Core i5-7640X and Core i7-7740X, which were reworked versions of the mainstream Core i5-7600K and Core i7-7700K, respectively. The main differences were that they consumed more power, cost more, and ran at slightly higher clock speeds.

There was frankly no reason to buy these chips. To get a Kaby Lake-X processor, you had to invest in Intel’s much more expensive HEDT platform. Intel also dropped the integrated graphics on these chips while charging a premium over their mainstream counterparts. The results speak for themselves. KitGuru found that the Core i7-7740X offered nearly the same performance as the Core i7-7700K, only with higher power consumption and a higher price tag. Worse, the cheaper Core i7-7700K offered better performance under a moderate overclock.

Despite the extra cost of Intel’s X299 platform, the Core i7-7740X didn’t even get access to the full number of PCIe lanes the platform was capable of. Instead, it was limited to the same 16 as the Core i7-7700K. Clearly, Intel pitched the Core i7-7740X as a more affordable way to buy into the X299 platform, with buyers expected to move up to more expensive X-series processors later. However, that plan didn’t pan out.

Adding to Intel’s problems were AMD’s new Ryzen chips. Just a few months before the Core i7-7740X was released, AMD launched its fiercely competitive Ryzen 1000 processors, which offered more cores at lower prices than Intel’s competing chips. Rebranding the Core i7-7700K on a more expensive platform looked tone-deaf at the time. Intel was doing nothing to close its gap to AMD and was essentially asking enthusiasts to spend even more.

AMD Phenom (2007)

After gaining a competitive edge over Intel with its K6 microarchitecture, AMD went on to release the Athlon processors. The first Athlon-branded products were so impressive that AMD continues to use the Athlon brand to this day, albeit for older Zen architectures. Athlon transformed AMD from a second-rate processor manufacturer into the competitive powerhouse it is today. By 2007, after Intel had released its first quad-core desktop processor, all eyes were on Team Red.

The answer was the Phenom, a line of quad-core processors designed to counter Intel’s wildly popular Core 2 Quad line. Even before its release, Phenom was plagued by problems. Exact specs and pricing weren’t known until the last minute, and AMD didn’t share any performance data before release. The biggest problem was a bug discovered shortly before the Phenom’s release that could cause a complete system lockup. AMD developed a workaround through the BIOS, which was found to reduce performance by nearly 20% on average.

And, of course, when the first Phenom processors went on sale, things got pretty bad. Even the mid-range Intel Core 2 Quad could outpace the flagship Phenom 9900 in almost every application, from general desktop use to productivity to gaming. And to make matters worse, AMD was asking for more money compared to its competitors. In the end, the company released quad-core processors alongside the Phenom.

AnandTech summarized this story nicely, writing: “If you were expecting a changing of the guard today, it’s just not going to happen.” In a later review, reviewer Anand Lal Shimpi called the Phenom “the biggest disappointment AMD has ever left us with.”

AMD eventually regained ground with the Phenom II, offering more affordable quad-core chips for the midrange market, while Intel pulled ahead with its newly minted Core i7. However, between the Athlon and the Phenom II, AMD was all but dead due to the disappointing performance and high price of the original Phenom chips.

Intel Pentium 4 Willamette (2001)

The Pentium 4 line eventually became a success, giving Intel its first Hyper-Threading-enabled chips and introducing the Extreme Edition branding, which carried over to several generations of X-series processors. However, things were different when the Pentium 4 was introduced. The first generation of chips, codenamed Willamette, was half-baked and expensive. These chips were beaten not only by cheaper AMD Athlon chips, but also by Intel’s own Pentium III.

When talking about performance, keep in mind that the Pentium 4 was released in the era of single-core processors. Models were separated by clock speed, not model number. The first two Pentium 4 processors were clocked at 1.4 and 1.5 GHz. Even the 1GHz Pentium III was able to outperform the 1.4GHz Pentium 4, and AMD’s Thunderbird-based 1.2GHz Athlon, released a few months earlier, was able to beat both chips in performance tests. Results in gaming were even worse.

When these processors were released, they were seen as a stopgap. Intel released them with the promise of higher clock speeds in the future, which the Pentium 4 eventually delivered. However, the lineup still had its share of problems. The most serious was Intel’s decision to use RDRAM instead of DDR SDRAM. Because it was more complex to manufacture, RDRAM was more expensive than DDR SDRAM. This was such a serious problem that Intel actually bundled two RDRAM modules in the box with every Pentium 4 processor.

The problem wasn’t with enthusiasts building their own PCs, but with Intel’s partners, who didn’t want to foot the bill for RDRAM when DDR SDRAM was cheaper and offered better performance. Intel ended up offering processors that failed to beat not only the competition, but even its own previous generation. And to make matters worse, they were more expensive because of the exotic memory interface.

In the months that followed, Intel released Pentium 4 chips with higher clock speeds, as well as a standard DDR interface. The branding eventually turned into a success story with Hyper-Threading and Extreme Edition, but for the first Pentium 4 processors, the disappointment was real.

AMD E-240 (2011)

We’ve focused on desktop processors in this list, but AMD’s E-240 deserves a special mention for being truly awful. It’s a single-core mobile processor that runs at 1.5GHz. Based on that, you might think it came out in the 2000s (Intel released the first Core Duo for laptops in 2006), but you’d be wrong. It came out in 2011. Around that time, even the weakest second-generation Intel Core i3 processor had two cores and four threads.

The E-240 never carried high expectations. It was released on AMD’s Brazos platform, a family of low-power chips meant to compete with Intel’s Atom. Even so, the E-240 lagged behind the competition. The standard for Atom at the time of the E-240’s release was two cores, and even AMD’s more powerful chips in the line, such as the E-300 and E-450, had two cores. The E-240 was aimed at budget laptops, but even by those standards it was years out of date when it came out in 2011.

What’s worse, the chip was designed with a single-channel memory controller, further hampering its already painfully slow performance. With only one core and no support for multiple threads, the E-240 had to process tasks one at a time. This had a severe impact on performance, with the E-240 lagging behind the dual-core E-350 by 36 percent.

The fact that a processor is weak doesn’t make it one of the worst of all time. There are plenty of AMD and Intel chips built for low-cost laptops that can’t be called powerful, but the E-240 is particularly painful given when it was released. It seems the main purpose of this chip was to trick unsuspecting buyers into purchasing silicon that was three to four years out of date.

Intel Itanium (2001)

In today’s world, we all think of Intel as the champion of the x86 instruction set architecture (ISA). Intel developed x86, and amid a wave of machines using the Arm instruction set, the company is waving the flag to prove that x86 is not dead. However, things weren’t always this way. At one time, Intel wanted to kill its own child by developing a new ISA. The Intel Itanium architecture, and the series of processors that came with it, was a joint venture between Intel and HP to develop a 64-bit ISA, and it was a colossal failure.

You’ve probably never heard of Itanium, and that’s because it never made its way into the mainstream market. When Itanium first appeared, it was talked about as a monumental shift in computing. It was Intel’s answer to PowerPC and its RISC instruction set, offering a 64-bit ISA without sacrificing the performance of 32-bit applications. At least, that’s what Intel and HP claimed. In reality, RISC competitors were much faster, and a year before Itanium appeared in data centers, AMD released its x86-64 ISA, an extension of x86 that could run 64-bit applications and is still in use today.

Itanium nevertheless had plenty of backing, so much so that Intel supported it for decades after 2001, when the processors were first introduced. HP and Intel announced their partnership in 1994, and by July 2001, when Itanium was released, major brands such as Compaq, IBM, Dell and Hitachi had signed up for the future envisioned by Intel and HP. Just a few years later, support for Itanium was all but phased out, and Intel followed AMD’s lead by developing its own x86-64 extension.

Although Itanium never made its way into the mainstream market, Intel technically shipped Itanium chips until 2021. And in 2011, even after x86-64 had established itself as the dominant ISA in the PC market, Intel reaffirmed its support for Itanium. Itanium’s long life was no accident, however. In 2012, court documents made public as part of a dispute between Oracle and HP over Itanium processors showed that HP paid Intel $690 million to continue producing the chips from 2009 through 2017. HP paid Intel to keep Itanium on life support.

We never saw mainstream PCs with Itanium processors, but the lineup is still one of the biggest failures in the entire computer industry. It was originally hailed as a revolution, but in the decades following Itanium’s release, Intel kept scaling back the ISA until it finally faded away.
