Minecraft has become an arena for AI competition

As traditional artificial intelligence (AI) benchmarking methods prove inadequate, developers are turning to more creative ways to evaluate the capabilities of generative models. For one group of developers, that means Minecraft, the Microsoft-owned sandbox game built around creativity and construction.

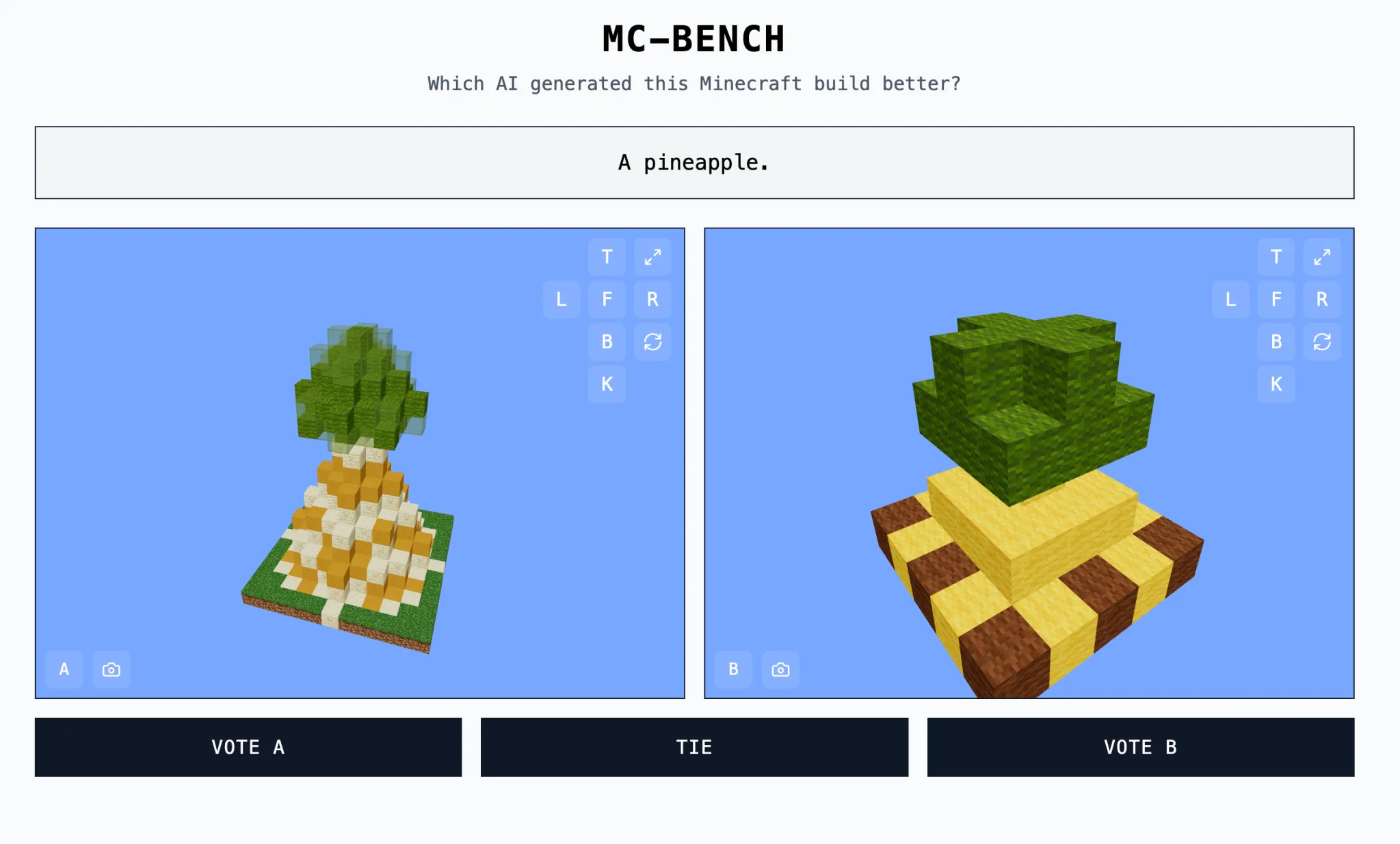

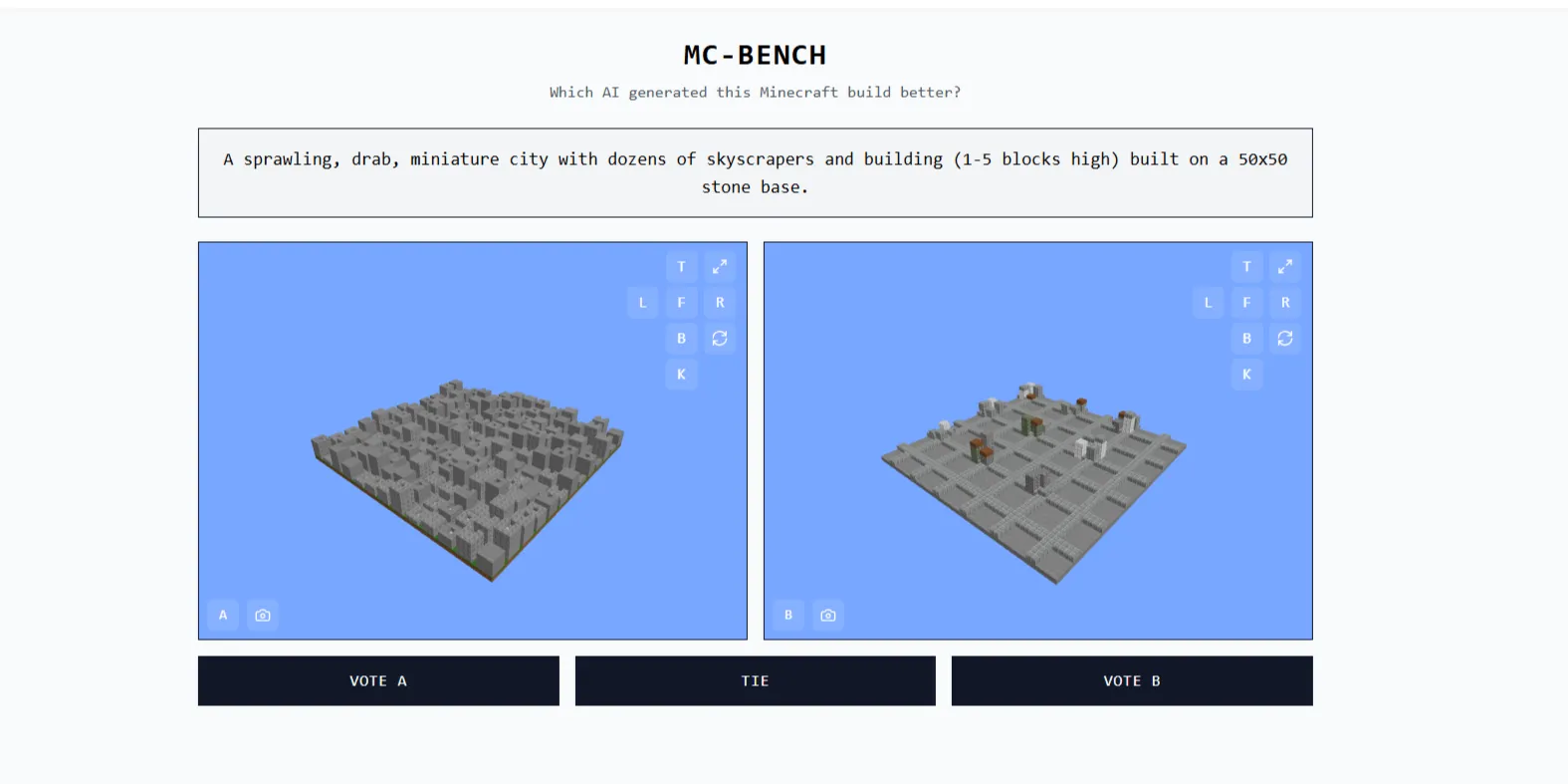

The website Minecraft Benchmark (or MC-Bench) was created to pit AI models against each other in head-to-head building challenges in Minecraft. Users vote on which model did better, and only after voting do they see which AI made each build.

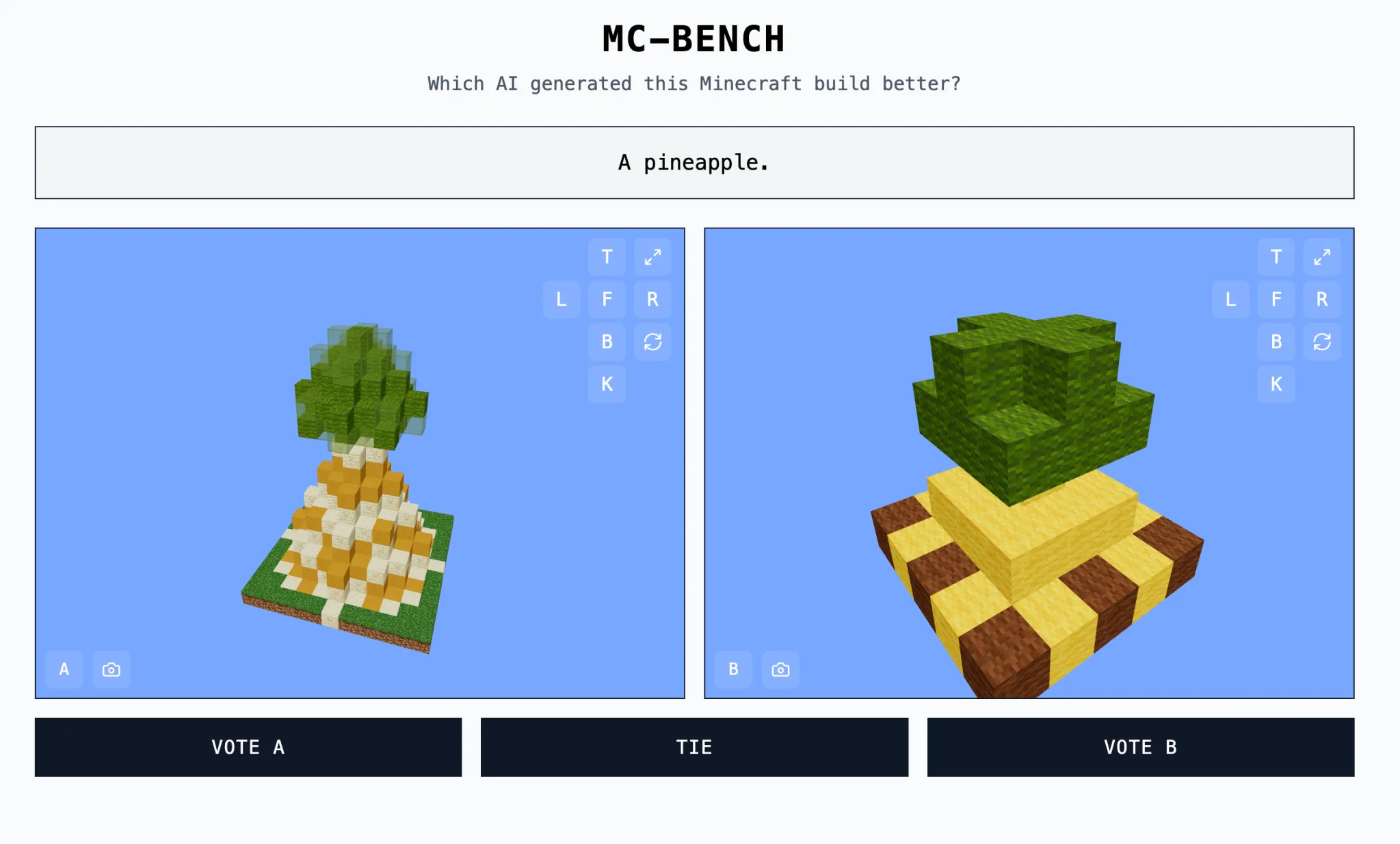

For Adi Singh, a 12th grader and founder of MC-Bench, the value of Minecraft lies not so much in the game itself as in how familiar it is. It’s the best-selling video game of all time, and even people who haven’t played it can judge how well a blocky representation of, say, a pineapple has been executed.

“Minecraft makes it much easier for people to see progress in AI development,” Singh noted in an interview with TechCrunch. “People have gotten used to Minecraft, its visual style and atmosphere.”

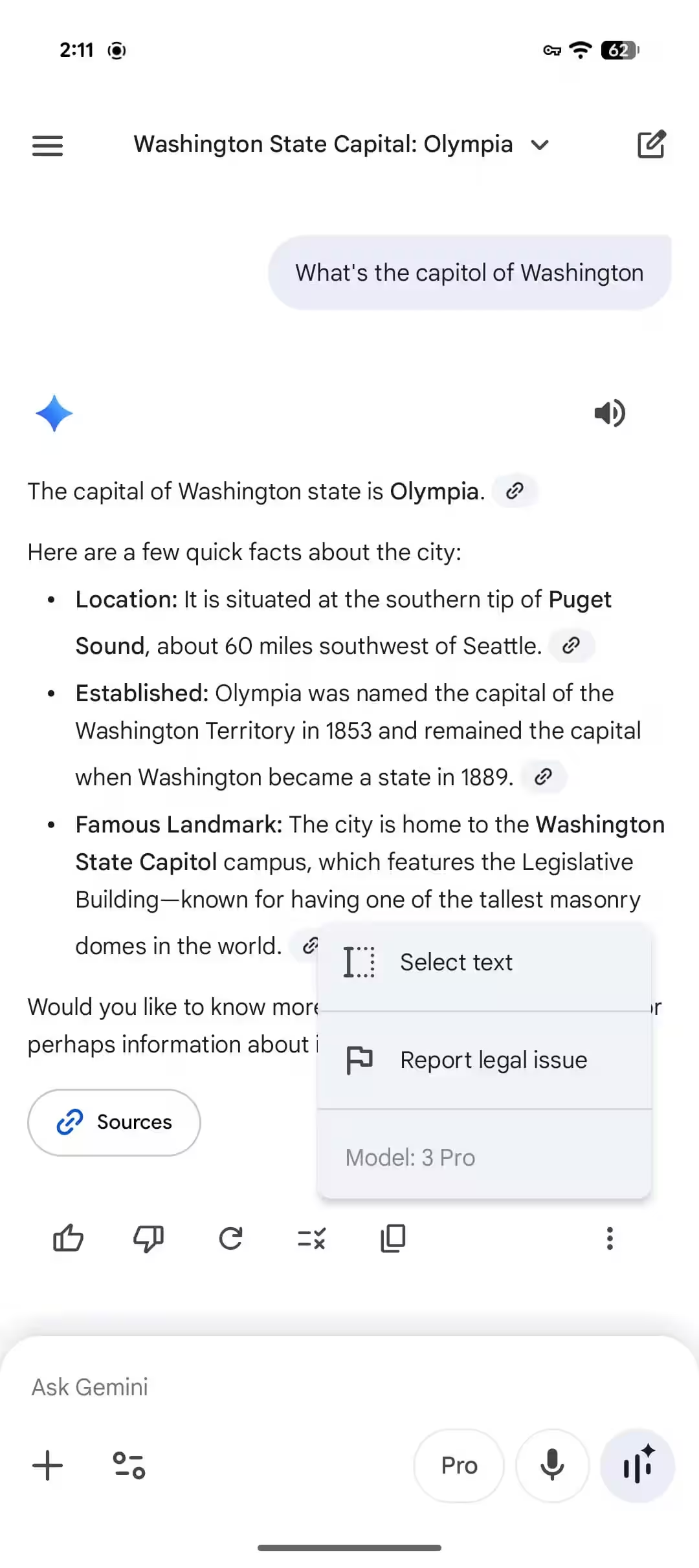

Eight volunteers currently contribute to MC-Bench. Companies such as Anthropic, Google, OpenAI, and Alibaba have provided their products for testing, but they are not otherwise affiliated with the project.

“Right now we are creating simple builds to assess how far we have come since the GPT-3 era, but we could expand the project to more complex tasks in the future,” Singh added. “Games can serve as a safe and controlled environment to test agent-based thinking, making them ideal for testing.”

Other games, such as Pokémon Red, Street Fighter, and Pictionary, have also been used as experimental AI benchmarks, in part because evaluating AI is notoriously difficult.

Researchers often test AI models on standardized evaluations, but many of these tests give models a “home field” advantage. Because of the way they are trained, models are naturally strong at certain narrow kinds of problem-solving, especially those that require rote memorization or basic extrapolation.

Simply put, it’s hard to understand what it means that OpenAI’s GPT-4 can score in the 88th percentile on the LSAT but can’t count the number of “r’s” in the word “strawberry.” Anthropic’s Claude 3.7 Sonnet achieved 62.3% accuracy on a standardized software engineering benchmark, yet it plays Pokémon worse than most five-year-olds.

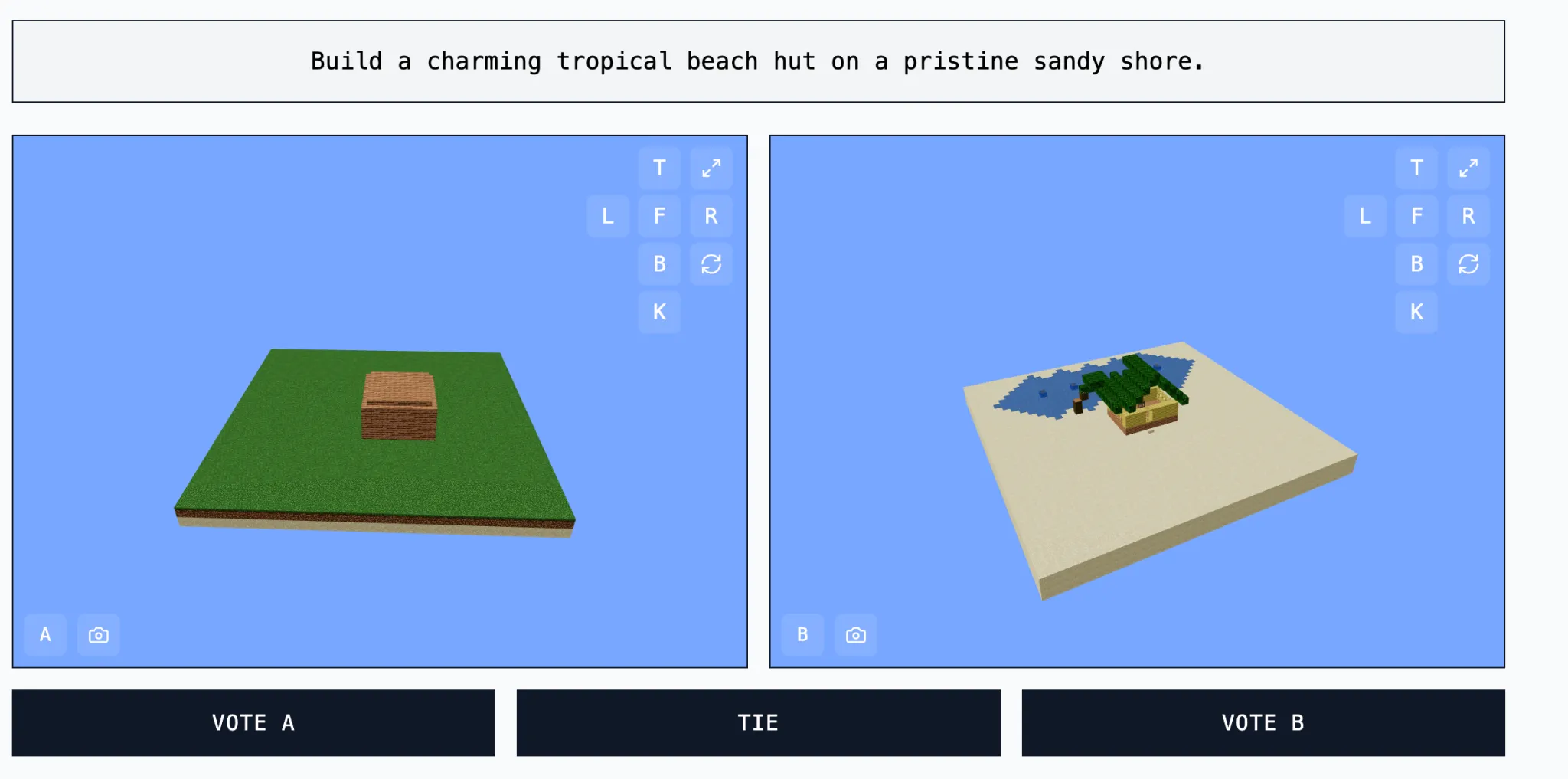

MC-Bench is technically a programming benchmark, since models must write code to create the prompted build, such as “Snowman” or “Charming tropical beach hut on a pristine sandy beach.”
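To give a sense of what "writing code to create a build" can look like, here is a minimal Python sketch for the “Snowman” prompt. It is an illustration only: the article does not describe the interface MC-Bench actually gives models, so the `sphere` and `build_snowman` helpers, the coordinate convention, and the output format (a mapping from positions to block names) are all assumptions.

```python
from typing import Dict, Tuple

Block = Tuple[int, int, int]  # (x, y, z), with y as the vertical axis (assumed)


def sphere(center: Block, radius: int, material: str) -> Dict[Block, str]:
    """Return block placements approximating a filled sphere."""
    cx, cy, cz = center
    blocks: Dict[Block, str] = {}
    for x in range(cx - radius, cx + radius + 1):
        for y in range(cy - radius, cy + radius + 1):
            for z in range(cz - radius, cz + radius + 1):
                # Keep only the cells that fall inside the sphere.
                if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= radius ** 2:
                    blocks[(x, y, z)] = material
    return blocks


def build_snowman() -> Dict[Block, str]:
    """Hypothetical build: three stacked snow spheres plus a one-block nose."""
    blocks: Dict[Block, str] = {}
    blocks.update(sphere((0, 3, 0), 3, "snow_block"))   # base
    blocks.update(sphere((0, 8, 0), 2, "snow_block"))   # torso
    blocks.update(sphere((0, 11, 0), 1, "snow_block"))  # head
    blocks[(0, 11, 2)] = "orange_wool"                  # nose, sticking out of the head
    return blocks


snowman = build_snowman()
```

Whether the result reads as a convincing snowman depends on proportions and detail, which is the kind of judgment the site leaves to human voters.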

But most MC-Bench users find it easier to judge whether a snowman looks better than to dig into the code, which gives the project wider appeal and lets it gather more data on which models perform better.
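One simple way to turn such head-to-head votes into a ranking is to compute each model's win rate. The sketch below assumes that approach purely for illustration; the article does not say how MC-Bench computes its leaderboard, and the `win_rates` function and the model names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def win_rates(votes: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Each vote is (winner, loser); return the share of comparisons each model won."""
    wins: Dict[str, int] = defaultdict(int)
    games: Dict[str, int] = defaultdict(int)
    for winner, loser in votes:
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    return {model: wins[model] / games[model] for model in games}


# Made-up votes between placeholder models.
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
    ("model_c", "model_a"),
]
rates = win_rates(votes)
leaderboard = sorted(rates, key=rates.get, reverse=True)
```

A raw win rate ignores how strong each opponent was; rating systems such as Elo adjust for that, at the cost of more bookkeeping.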

How much these results say about the real-world usefulness of AI remains an open question, however. Singh believes they are an important indicator.

“The current leaderboard pretty accurately reflects my own experience with these models, which is different from many purely text-based tests,” Singh concluded. “Perhaps MC-Bench can be useful for companies to see if they are moving in the right direction.”