Alibaba releases Marco-o1, a new large language model

Alibaba continues to push forward in artificial intelligence. Its researchers recently unveiled Marco-o1, a language model designed to solve complex, open-ended problems that traditional training approaches handle poorly. The work builds on the success of OpenAI o1 and the concept of large reasoning models (LRMs), offering improved reasoning and decision-making mechanisms.

What makes Marco-o1 unique?

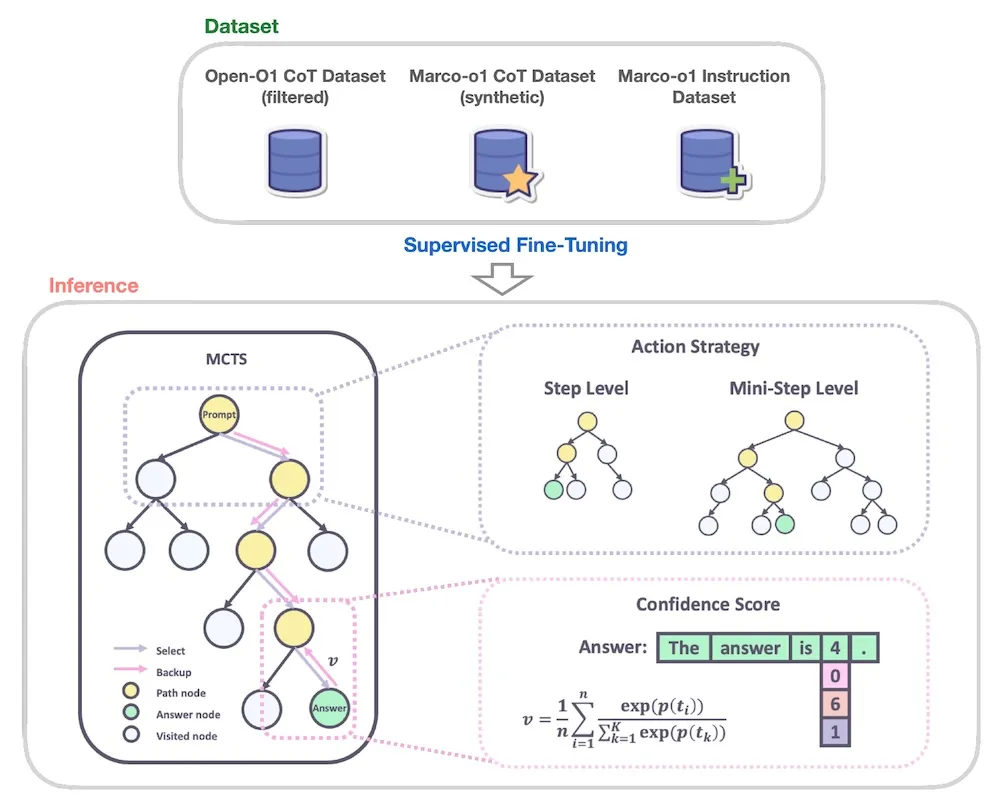

Marco-o1 is a fine-tuned version of the Qwen2-7B-Instruct model that combines Chain-of-Thought (CoT) fine-tuning, the Monte Carlo Tree Search (MCTS) algorithm, and new reasoning strategies. Together, these methods let the model tackle problems where there are no clear standards or metrics for success.

Benefits of Monte Carlo Tree Search

Marco-o1 uses MCTS to explore and evaluate multiple reasoning paths. The algorithm builds a tree of candidate answers and scores each branch by the model's confidence in it. As a result, Marco-o1 does not simply answer a question: it weighs several possible solutions and selects the most promising one.
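
The confidence-based selection described above can be sketched in a few lines. This is a minimal illustration rather than Marco-o1's actual implementation, and it covers only the scoring and selection of finished candidates, not the full tree search. It assumes each generated token comes with its own log-probability plus those of a few alternative tokens. The names `token_confidence`, `path_score`, and `select_best` are hypothetical, as are the example numbers.

```python
import math

def token_confidence(chosen_logprob, alternative_logprobs):
    """Normalize the chosen token's probability against its alternatives.

    Assumption: confidence is a softmax over the log-probabilities of the
    chosen token and a handful of competing tokens.
    """
    all_logprobs = [chosen_logprob] + list(alternative_logprobs)
    denom = sum(math.exp(lp) for lp in all_logprobs)
    return math.exp(chosen_logprob) / denom

def path_score(steps):
    """Average token confidence over one candidate reasoning path."""
    confidences = [token_confidence(chosen, alts) for chosen, alts in steps]
    return sum(confidences) / len(confidences)

def select_best(candidates):
    """Return the name of the candidate path with the highest score."""
    return max(candidates, key=lambda name: path_score(candidates[name]))

# Two made-up candidate paths; each step is (chosen_logprob, [alternative_logprobs]).
candidates = {
    "path_a": [
        (math.log(0.9), [math.log(0.05), math.log(0.05)]),
        (math.log(0.8), [math.log(0.1), math.log(0.1)]),
    ],
    "path_b": [
        (math.log(0.4), [math.log(0.3), math.log(0.3)]),
        (math.log(0.5), [math.log(0.25), math.log(0.25)]),
    ],
}

best = select_best(candidates)
print(best, round(path_score(candidates[best]), 2))
```

In a real search the score would be fed back up the tree to decide which branch to expand next; here it only ranks complete candidates.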

The Alibaba researchers also made the granularity of the search configurable. Users can set how many tokens are generated at each step of the search, trading accuracy against computational cost: smaller steps allow a finer-grained exploration but require more computation.
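
To make the trade-off concrete, the sketch below splits a generated token sequence into fixed-size steps and counts how many candidate expansions a search would perform. It is an illustration under stated assumptions: `split_into_steps` and `expansion_count` are hypothetical helpers, and the cost model (decision points multiplied by a fixed branching factor) is a deliberately simple estimate, not a measurement of Marco-o1.

```python
import math

def split_into_steps(tokens, step_size):
    """Chunk a token sequence into search steps of at most step_size tokens."""
    if step_size <= 0:
        raise ValueError("step_size must be positive")
    return [tokens[i:i + step_size] for i in range(0, len(tokens), step_size)]

def expansion_count(num_tokens, step_size, branching):
    """Rough cost estimate: one decision point per step, `branching` candidates each."""
    return math.ceil(num_tokens / step_size) * branching

tokens = list(range(200))  # stand-in for 200 generated token ids

for step_size in (32, 64):
    steps = split_into_steps(tokens, step_size)
    cost = expansion_count(len(tokens), step_size, branching=3)
    print(f"step_size={step_size}: {len(steps)} decision points, ~{cost} expansions")
```

Halving the step size roughly doubles the number of points at which the search can change course, and the number of candidates to generate and score grows with it.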

Built-in self-correction mechanism

One of Marco-o1's distinctive features is its reflection mechanism. While reasoning, the model periodically prompts itself to reconsider its conclusions with a phrase along the lines of “Wait! I may have made a mistake. I need to think again.” This helps it catch errors early, making the results more accurate and reliable.
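
The idea can be sketched as a loop that appends the reflection phrase to the model's draft and asks it to answer again. This is a toy illustration and not Marco-o1's code: `reflect_and_retry` is a hypothetical helper, `toy_generate` is a stand-in for a real model call, and the phrase is taken as quoted in this article.

```python
REFLECTION_PROMPT = "Wait! I may have made a mistake. I need to think again."

def reflect_and_retry(generate, question, rounds=1):
    """Get a draft answer, then re-ask with the reflection phrase appended.

    `generate` is any callable that maps a text context to an answer string.
    """
    context = question
    answer = generate(context)
    for _ in range(rounds):
        # Feed the draft back with the reflection phrase so the next
        # attempt is conditioned on the earlier reasoning.
        context = f"{context}\n{answer}\n{REFLECTION_PROMPT}"
        answer = generate(context)
    return answer

def toy_generate(context):
    """Stand-in model: answers wrongly at first, correctly after reflecting."""
    return "4" if REFLECTION_PROMPT in context else "5"

result = reflect_and_retry(toy_generate, "What is 2 + 2?")
print(result)
```

With `rounds=0` the helper returns the first draft unchanged, which makes it easy to compare answers with and without reflection.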

Test results: from math to idiom translation

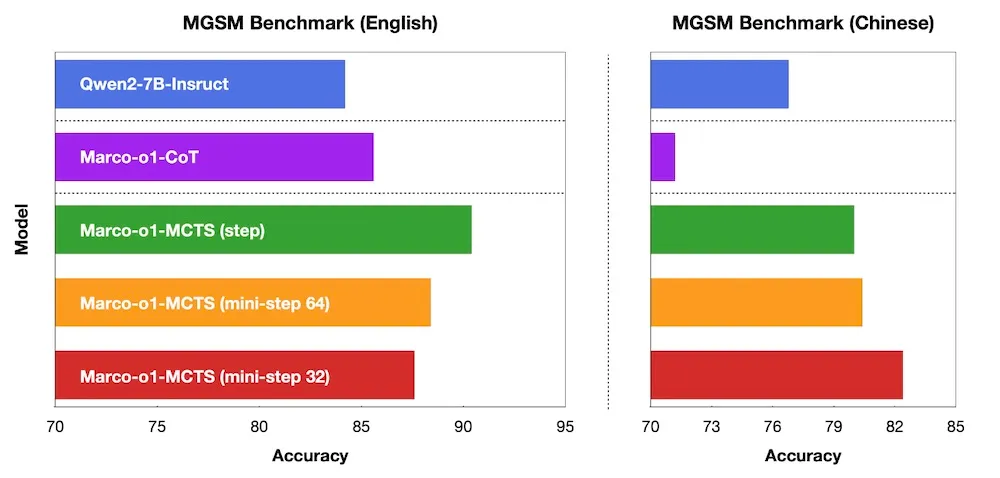

To verify the model’s capabilities, the researchers tested Marco-o1 on tasks of varying difficulty. On the MGSM benchmark, a multilingual set of grade-school math problems, the model showed a clear improvement over the base Qwen2-7B-Instruct model, especially when using MCTS.

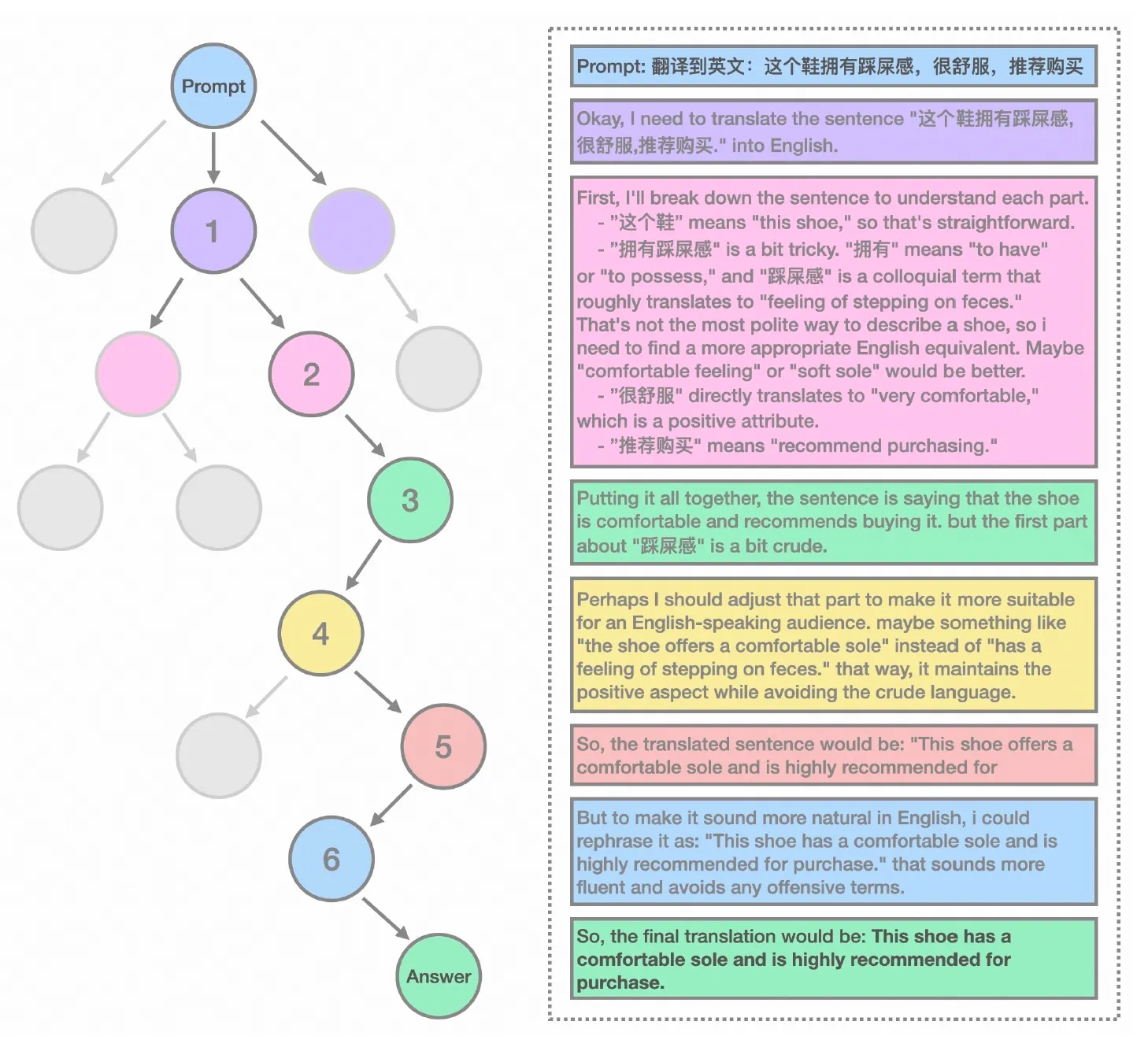

One of Marco-o1's most impressive abilities is translating complex idiomatic expressions and slang. For example, the model rendered a Chinese colloquialism that literally means “this shoe gives the feeling of stepping on something soft” as “this shoe has a comfortable sole” in English. To do this, Marco-o1 reasoned through a chain of thought, weighing the cultural and linguistic context.

Marco-o1 and the future of reasoning models

Marco-o1 is already available to researchers on the Hugging Face platform along with a partially open dataset. The move underscores Alibaba’s commitment to supporting innovation and development in the artificial intelligence community.

New era of competition in LRM development

Marco-o1 isn’t the only model inspired by OpenAI o1. Last week, China’s DeepSeek unveiled a competitor, R1-Lite-Preview, and researchers from several universities in China released LLaVA-o1, a model for image and text processing that uses an inference-time scaling approach. These models build on the ideas laid out by OpenAI and demonstrate how inference-time scaling, which spends more computation while generating an answer, can change the approach to building AI systems.
