DeepSeek releases the V3.1 model: what’s new?

Chinese company DeepSeek has unveiled DeepSeek V3.1, an updated version of its flagship LLM. The headline changes are a context window expanded to 128,000 tokens and a parameter count raised to 685 billion.

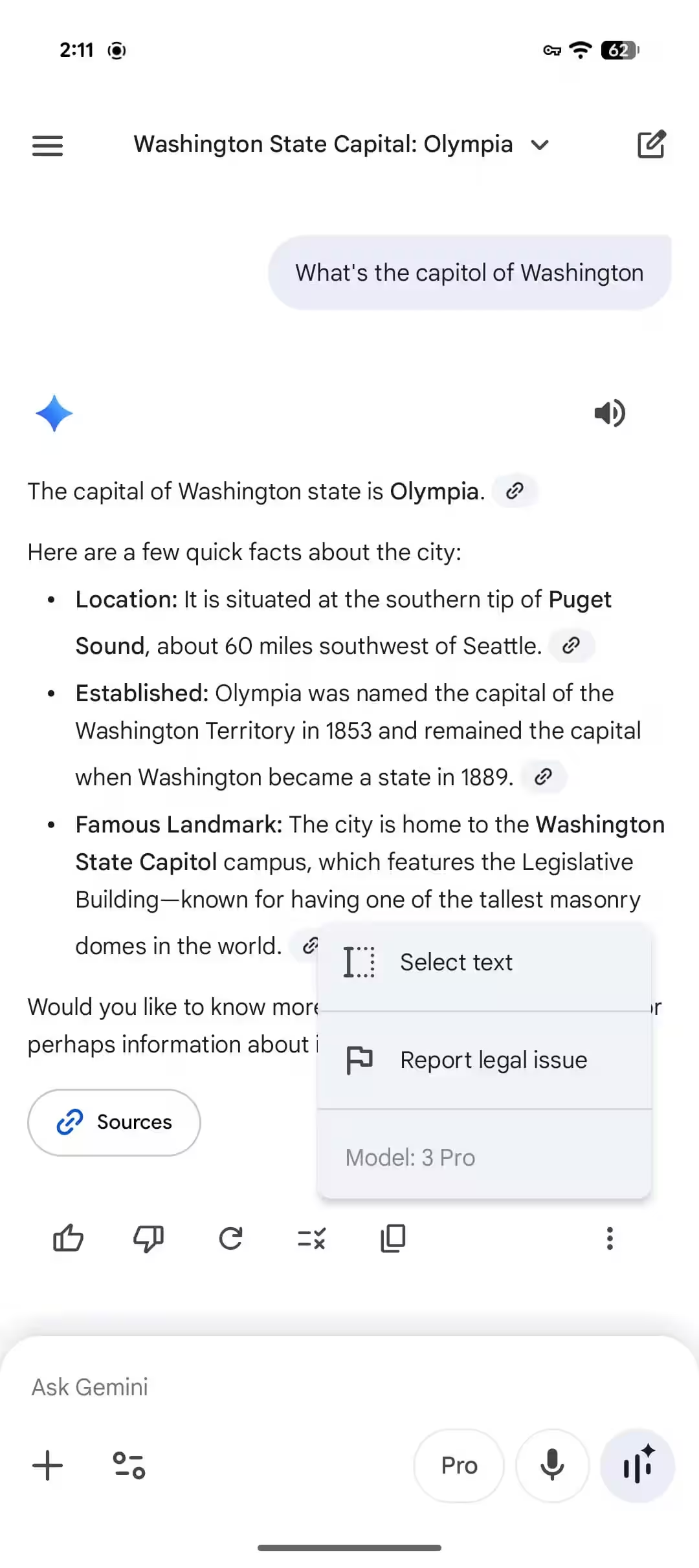

What’s changed in V3.1: the context window now holds the equivalent of an entire 300-400-page book, which benefits long-document analysis, long-form text generation and multi-topic dialogs. The Mixture-of-Experts (MoE) architecture is retained: only 37 billion parameters are activated for each token. Support for the BF16, FP8 and F32 formats provides flexibility across deployment environments. The model is available through the API and on Hugging Face under the open-source MIT license.
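To make the "only some parameters are active" point concrete, here is a minimal toy sketch of top-k expert routing, the general idea behind MoE layers. It is an illustration under stated assumptions, not DeepSeek's implementation: the softmax gate, the 16 experts, k = 2 and the scalar "experts" are all hypothetical choices made for readability. The key property it shows is that a router scores every expert, but only the k best-scoring experts actually run for a given token.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k experts with the highest router scores and renormalize
    their weights so they sum to 1 (a generic top-k gate, assumed here)."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy setup: 16 hypothetical experts, each a simple scalar function.
calls = []


def make_expert(idx):
    def expert(x):
        calls.append(idx)  # record which experts actually execute
        return (idx + 1) * x
    return expert


NUM_EXPERTS, K = 16, 2
experts = [make_expert(i) for i in range(NUM_EXPERTS)]
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

y = moe_forward(1.0, experts, logits, K)
print(f"output={y:.3f}, experts run: {sorted(calls)} of {NUM_EXPERTS}")
```

In this toy, 2 of 16 experts execute per input. For V3.1 the corresponding figure quoted above is 37 billion active out of 685 billion total parameters, roughly 5.4%, which is why per-token compute is far below what the total parameter count suggests.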

In testing, V3.1 scored 71.6% on the Aider coding benchmark, higher than Claude Opus 4, which makes it one of the strongest open-source LLMs for programming. Its math and logic skills have improved, but users report no noticeable gain in “reasoning” over R1-0528, released in May 2025.

The DeepSeek interface no longer refers to the R1 series: V3.1 handles both conventional and “reasoning” tasks in a single hybrid architecture.

Training the original V3 cost approximately $5.6 million (2.8 million GPU hours on Nvidia H800 chips). An attempt to train R2 on Huawei Ascend chips failed because of compatibility and performance problems, so DeepSeek settled on a hybrid scheme: training on Nvidia hardware and inference on Ascend. This complicated development and delayed the release of R2; founder Liang Wenfeng has reportedly expressed displeasure at the delay.
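Dividing the quoted cost by the quoted GPU time gives the implied price per GPU hour. This is a back-of-the-envelope check derived from the two figures above, not a rate stated in the text:

```latex
\frac{\$5.6 \times 10^{6}}{2.8 \times 10^{6}\ \text{GPU-hours}} \approx \$2 \text{ per H800 GPU-hour}
```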

Other factors have also contributed to the delay.

Meanwhile, Alibaba moved faster with Qwen3, adopting similar solutions ahead of DeepSeek. The situation has highlighted the limits of China’s semiconductor base and the difficulty startups face in balancing politics and technology.