YouTube fights the use of AI to fake creators' content

Tuesday was a big day for content creators on YouTube: the company announced the official launch of its AI-assisted likeness detection feature for members of the YouTube Partner Program. The technology, already tested in a pilot, lets creators request the removal of AI-generated content that uses their face or voice without permission.

A YouTube spokesperson told TechCrunch that this is the first wave of the rollout and that eligible creators have already been notified by email.

The AI-assisted detection technology identifies content that uses a creator's likeness, which helps combat misuse of their face or voice, for example to advertise products without consent or to spread misinformation. One such case involved Elecrow, which used AI to mimic the voice of YouTuber Jeff Geerling to promote its products.

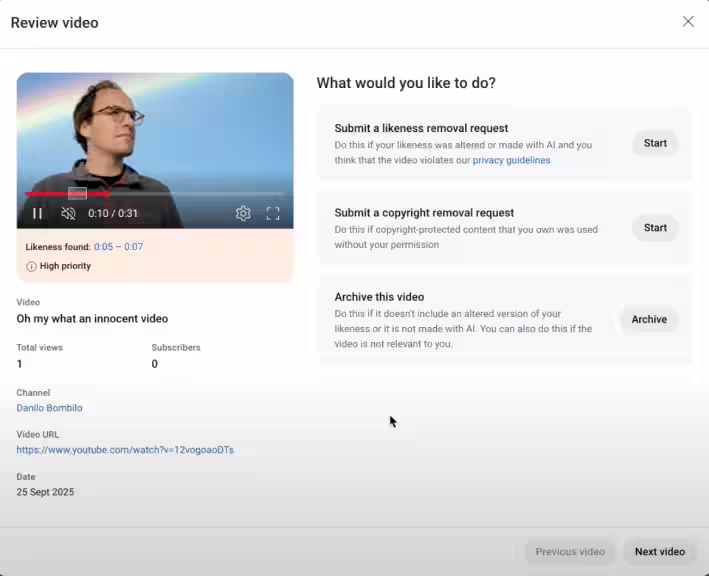

On its Creator Insider channel, YouTube has published instructions on how to use the system. To get started, creators go to the “Likeness” tab, consent to data processing, and scan a QR code with their smartphone. The code redirects to an identity verification page, where they upload a photo ID and a short video selfie. Once access is granted, creators can review the detected videos and submit a removal request or a copyright complaint. There is also an option to archive detected videos.

Creators can opt out of the technology at any time, and scanning will stop within 24 hours of opting out.

The feature matters in the fight against abusive uses of AI in video creation, especially given recent cases of voices and faces being imitated to commit fraud or spread fakes. YouTube says it remains committed to countering misinformation and protecting creators’ rights.