Pruna AI open-sources its framework for compressing AI models

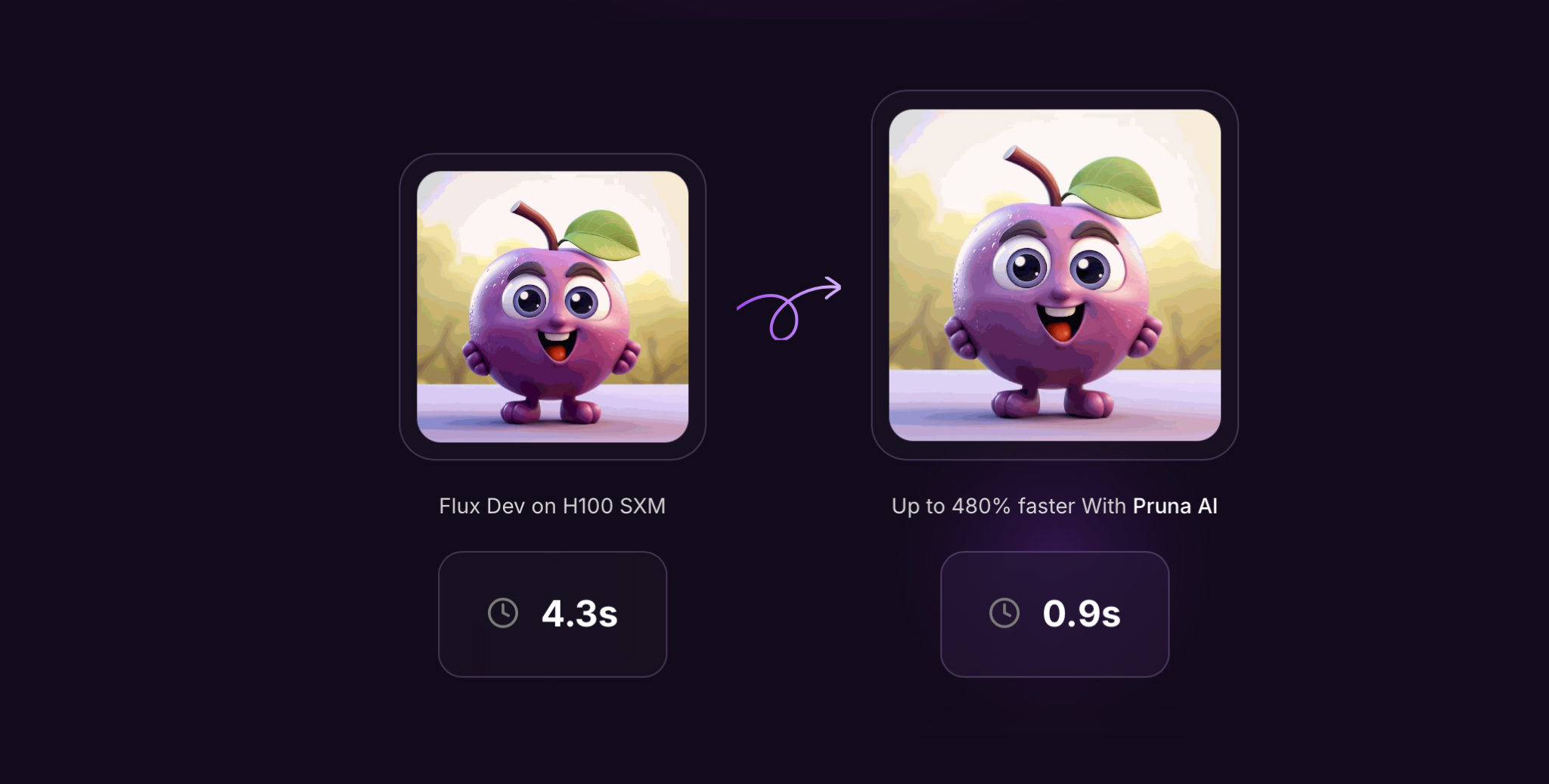

European startup Pruna AI, which develops algorithms for compressing AI models, announced that it will make its framework open source on Thursday. The tool allows optimization of neural networks through caching, pruning, quantization and distillation techniques.

How does the Pruna AI framework work?

The Pruna AI framework allows developers to automate model compression, combine different optimization methods and evaluate their impact on model quality.

“If you draw an analogy, we’re doing for compression methods what Hugging Face did for Transformers and diffusion models – standardize invocation, persistence, and loading,” explains Pruna AI co-founder and CTO John Rachwan in an interview with TechCrunch.

Why it matters

Large AI labs like OpenAI already use distillation to speed up their models. For example, GPT-4 Turbo is a simplified and faster version of GPT-4, and the Flux.1-schnell model was created as a distilled version of Black Forest Labs’ Flux.1.

Distillation extracts knowledge from a large model through a “teacher-student” mechanism. Developers send queries to a powerful teacher model, record its responses, and train a more compact student network to approximate the behavior of the original.
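The teacher-student idea can be illustrated with a minimal sketch of a common distillation objective: the student is penalized by the KL divergence between the teacher's and the student's temperature-softened output distributions. This is a generic textbook formulation in plain Python, not Pruna AI's implementation; the function names, the temperature value, and the example logits are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    A lower value means the student mimics the teacher more closely.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits for one query with three possible answers.
teacher = [2.0, 1.0, 0.1]
good_student = [1.9, 1.1, 0.2]   # close to the teacher
poor_student = [0.1, 1.0, 2.0]   # ranks the answers in reverse

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

In training, this loss would be minimized over many queries, usually alongside a standard loss on ground-truth labels where those are available.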

“Usually big companies build these tools inside their ecosystem, and in the open-source environment you only find standalone methods like quantization for LLMs or caching for diffusion models,” Rachwan says. “Pruna AI brings them all together, making the process simple and easy to use.”

Who can use this?

Although Pruna AI supports different types of models (LLM, diffusion models, speech recognition, computer vision), the main focus right now is optimization of generative models for images and video.

The startup’s customers already include Scenario and PhotoRoom.

Besides the free version, Pruna AI offers an enterprise solution with an optimization agent that automatically selects the best compression parameters.

“You simply upload a model and specify that you want to increase speed but not lose more than 2% in accuracy. The agent then selects the optimal combination of compression methods,” explains Rachwan.
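The kind of constrained choice Rachwan describes can be sketched as follows: among candidate combinations of compression methods, keep those whose accuracy loss stays within the budget, then pick the fastest. This is only an illustration of the selection logic, not Pruna AI's agent or API; the `Candidate` type, the `pick_best` function, and every number below are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One hypothetical combination of compression methods and its measured effect."""
    methods: tuple
    speedup: float        # e.g. 2.0 means twice as fast as the original
    accuracy_drop: float  # e.g. 0.02 means 2% lower accuracy


def pick_best(candidates, max_accuracy_drop=0.02):
    """Return the fastest candidate within the accuracy budget, or None."""
    feasible = [c for c in candidates if c.accuracy_drop <= max_accuracy_drop]
    return max(feasible, key=lambda c: c.speedup, default=None)


# Made-up measurements, for illustration only.
candidates = [
    Candidate(("quantization",), speedup=1.8, accuracy_drop=0.010),
    Candidate(("quantization", "caching"), speedup=2.6, accuracy_drop=0.018),
    Candidate(("quantization", "pruning", "caching"), speedup=3.4, accuracy_drop=0.045),
]

best = pick_best(candidates, max_accuracy_drop=0.02)
print(best.methods if best else "no combination meets the accuracy budget")
```

A real agent would also have to measure each candidate on the uploaded model, which is the expensive part; the sketch assumes those measurements already exist.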

Business model and investment

Pruna AI uses hourly billing in the Pro version, similar to renting GPUs in cloud services.

The developers believe that optimizing AI models can reduce computational costs. For example, they were able to shrink a Llama model by a factor of 8 with virtually no loss in quality.

Pruna AI recently raised $6.5 million in a seed round. Investors include:

- EQT Ventures
- Daphni
- Motier Ventures
- Kima Ventures

The open-source framework will be available as early as this Thursday.