Apple is designing robots with AI and Siri support

Rumors about Apple’s robots have received fresh confirmation. Bloomberg reports that Apple is working on several home robot projects in parallel in Cupertino.

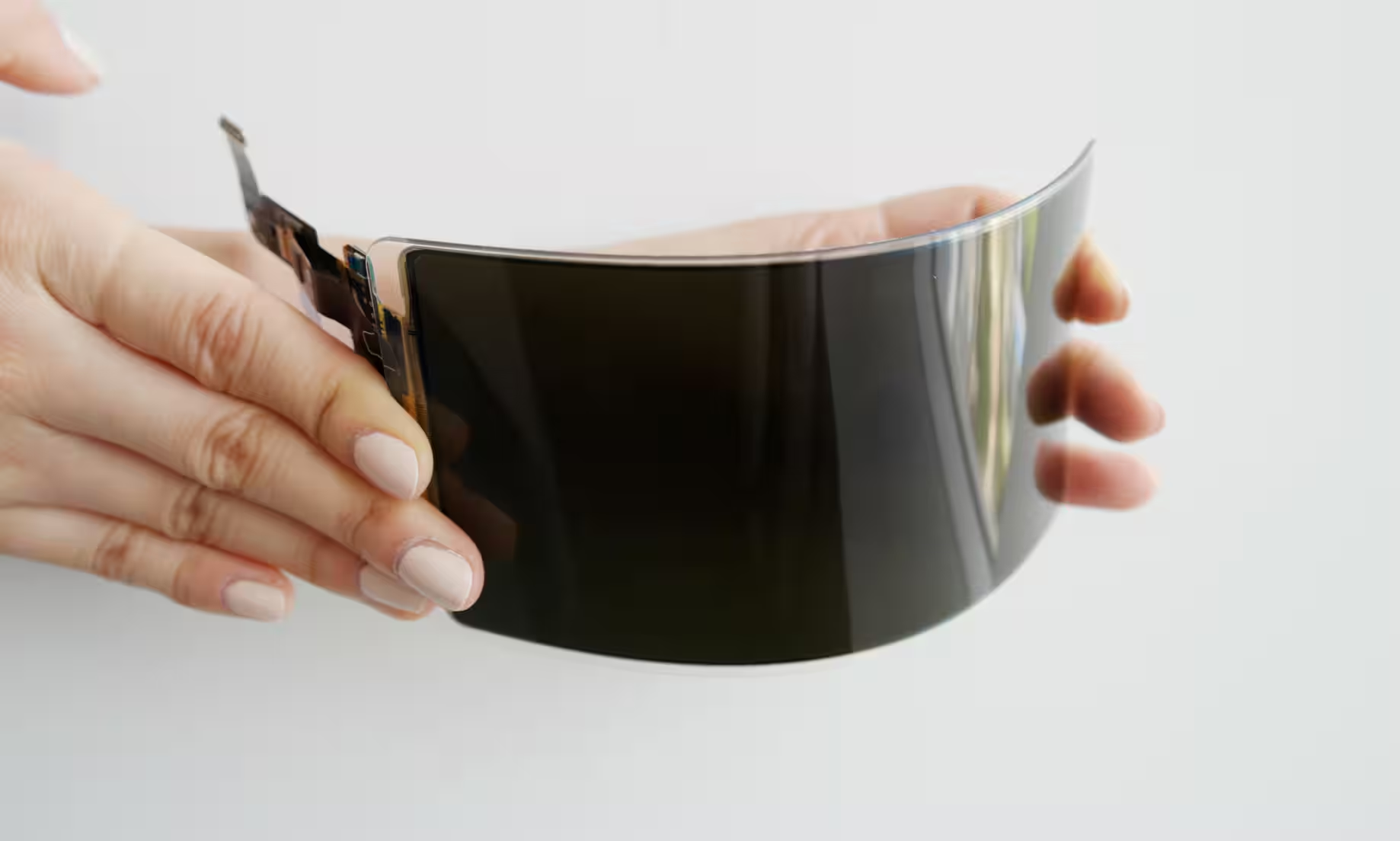

The main one is an iPad-like device on a robotic arm. It will be able to respond naturally to people, turn toward whoever is speaking, and keep the person in frame during FaceTime calls.

A new version of Siri with improved AI capabilities will play a key role. It will be able to hold more natural conversations and even unobtrusively suggest options as people talk. For example, when two people are discussing dinner, Siri could suggest a restaurant.

There are also reports that Apple may use the recognizable Finder face from macOS in the robot’s design to give the device a sense of “presence” and make it feel more human.

According to insiders, other form factors are being discussed in parallel, from a robot on wheels (similar to Amazon’s Astro) to a full-fledged humanoid robot. For industrial use, Apple is developing a large manipulator, codenamed T1333, aimed at manufacturing.

The projects are overseen by Kevin Lynch, who previously worked on watchOS and Apple’s automotive initiatives. However, as Bloomberg points out, not all of Apple’s research makes it to release; the canceled Project Titan is a case in point. Still, the iPad on a robotic arm looks the most promising and the closest to an actual launch.