New study shows OpenAI models ‘memorize’ copyrighted content

A new study suggests that OpenAI likely used copyrighted content to train some of its artificial intelligence models.

The company faces lawsuits from authors, programmers and other copyright holders who accuse OpenAI of using their works (books, code and more) to build its models without authorization. OpenAI claims it is acting under fair use, but the plaintiffs argue that U.S. copyright law contains no exception for training data.

The study was conducted by researchers from the University of Washington, the University of Copenhagen and Stanford. They developed a new method to identify the data that models memorized during training.

AI models work as prediction engines: by learning from large amounts of data, they pick up patterns that let them generate text or images. Most of their outputs are not exact copies of the training material, but models sometimes reproduce fragments of it. For example, image models can reproduce movie stills, and language models can copy news articles.

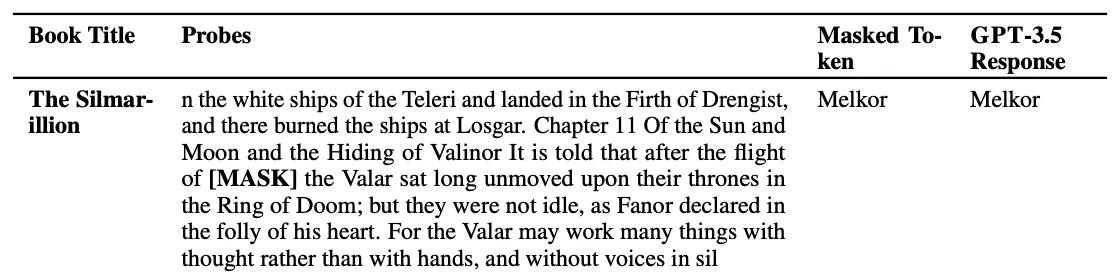

The study relies on so-called “high-surprise” words: words that are statistically unlikely to appear in a given context. For example, in the sentence “Jack and I sat perfectly still while the radar buzzed,” the word “radar” is high-surprise because it is less predictable there than more common words would be.
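
To make “high-surprise” concrete: surprisal is commonly measured as the negative log probability of a word given its context, so less likely words score higher. This minimal Python sketch ranks a few candidate words for the blank in the example sentence. The probabilities are invented for illustration and are not from the study; a real system would obtain them from a language model.

```python
import math

# Hypothetical next-word probabilities for the slot in
# "Jack and I sat perfectly still while the ___ buzzed".
# These numbers are invented for illustration, not taken from the study.
candidate_probs = {
    "engine": 0.30,
    "radio": 0.25,
    "phone": 0.20,
    "alarm": 0.15,
    "radar": 0.02,
}


def surprisal_bits(p: float) -> float:
    """Surprisal in bits: -log2(p). Less likely words get larger values."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


def rank_by_surprisal(probs: dict[str, float]) -> list[tuple[str, float]]:
    """Return (word, surprisal) pairs sorted from most to least surprising."""
    scored = [(word, surprisal_bits(p)) for word, p in probs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for word, bits in rank_by_surprisal(candidate_probs):
        print(f"{word:>7}: {bits:.2f} bits")
```

With these made-up numbers, “radar” scores about 5.6 bits, versus roughly 1.7 bits for “engine”.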

The researchers tested several OpenAI models, including GPT-4 and GPT-3.5. They masked high-surprise words in excerpts from fiction books and New York Times articles and asked the models to guess the hidden words. A correct guess suggested that the model had memorized the passage during training.
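
The masking test can be sketched in the same way. This is a hypothetical illustration, not the researchers' code: `guess_fn` stands in for a call to a model (no real OpenAI API is used), and scoring by exact match on a single hidden word is an assumption made for simplicity. The toy `memorizing_model` looks up a passage it has “seen,” while `generic_model` always returns a common word.

```python
from typing import Callable

MASK = "[MASK]"


def mask_word(tokens: list[str], index: int) -> tuple[str, str]:
    """Replace tokens[index] with MASK; return (masked_text, hidden_word)."""
    hidden = tokens[index]
    masked = tokens[:index] + [MASK] + tokens[index + 1:]
    return " ".join(masked), hidden


def guess_accuracy(
    passages: list[tuple[list[str], int]],
    guess_fn: Callable[[str], str],
) -> float:
    """Fraction of passages where guess_fn exactly recovers the masked word.

    Each passage is (tokens, index of the high-surprise word to hide).
    """
    if not passages:
        return 0.0
    correct = 0
    for tokens, idx in passages:
        masked_text, hidden = mask_word(tokens, idx)
        # Case-insensitive exact match: a simplifying assumption.
        if guess_fn(masked_text).strip().lower() == hidden.lower():
            correct += 1
    return correct / len(passages)


# Toy data: one passage with the high-surprise word "radar" chosen for masking.
SENTENCE = "Jack and I sat perfectly still while the radar buzzed".split()
PASSAGES = [(SENTENCE, SENTENCE.index("radar"))]

# Hypothetical stand-in for a model that saw this exact passage in training.
_SEEN = {mask_word(SENTENCE, SENTENCE.index("radar"))[0]: "radar"}


def memorizing_model(masked_text: str) -> str:
    # Recalls the hidden word only for passages it has "seen".
    return _SEEN.get(masked_text, "engine")


def generic_model(masked_text: str) -> str:
    # Always guesses a common, low-surprise word.
    return "engine"


if __name__ == "__main__":
    print("memorizing:", guess_accuracy(PASSAGES, memorizing_model))
    print("generic:", guess_accuracy(PASSAGES, generic_model))
```

Under these assumptions, the memorizing stand-in recovers “radar” and scores 1.0, while the generic one scores 0.0. A gap of that kind, measured over many passages, is the sort of signal the approach relies on.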

The results showed signs that GPT-4 had memorized portions of popular fiction books and New York Times articles. Abhilasha Ravichander of the University of Washington stressed that transparency about training data is important for building robust language models.

OpenAI is advocating for looser rules on using protected content to train models. Although the company has licensing agreements for some content and offers opt-out mechanisms for copyright holders, it is also lobbying governments to establish clearer fair use rules for AI.