Google’s new Gemini 2.5 allows AI to interact with websites in the same way as humans

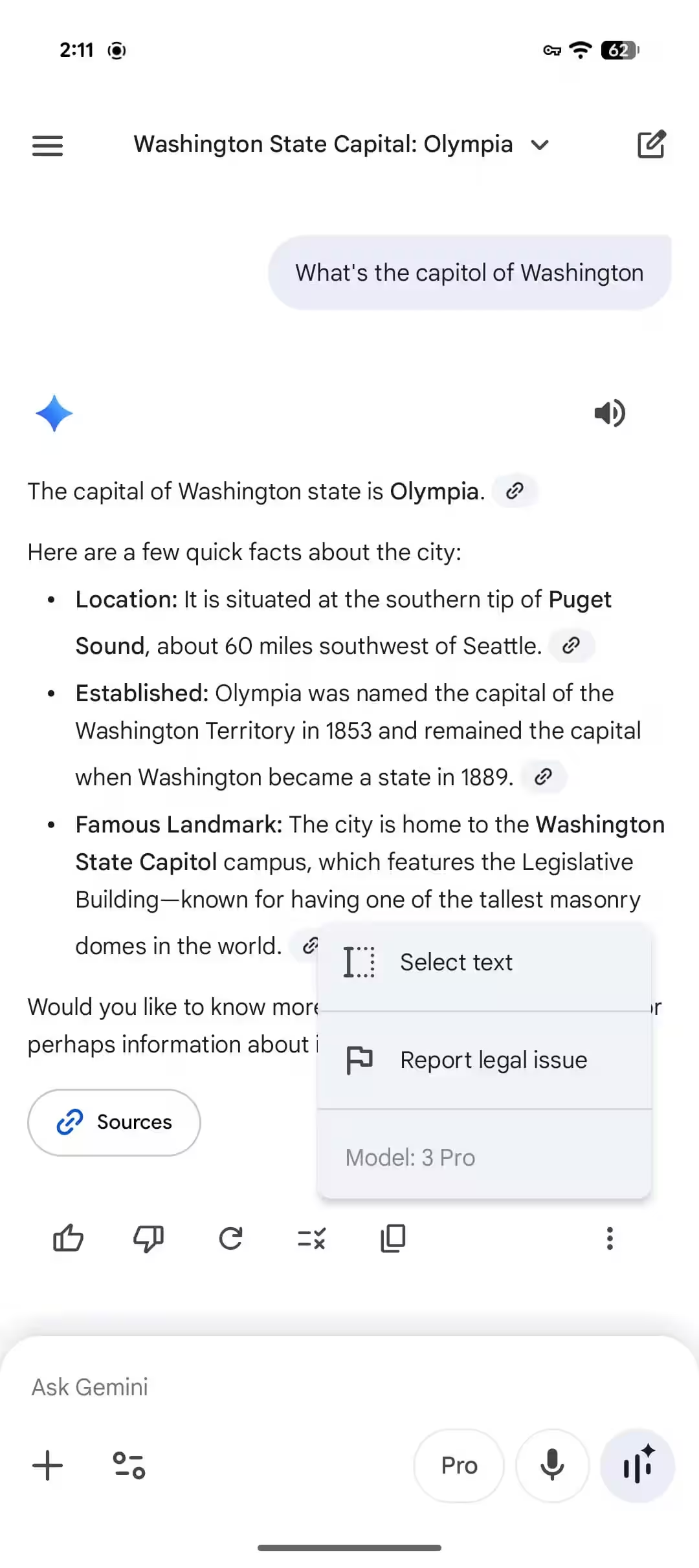

Google has released a new artificial intelligence model called Gemini 2.5 Computer Use. The model lets AI agents interact with websites and user interfaces the way a human does. It is currently available in public preview through the Gemini API, which developers can access via Google AI Studio and Vertex AI.

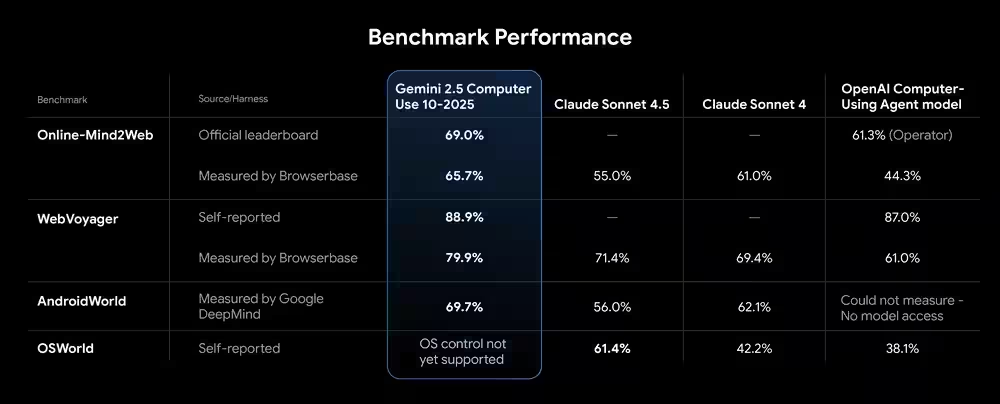

The Gemini 2.5 Computer Use model builds on the visual understanding and reasoning capabilities of Gemini 2.5 Pro. It can perform a wide range of browser actions, such as pressing keys, typing text, scrolling pages, moving the cursor, opening drop-down menus, and navigating to URLs. Google says it outperforms alternatives on several benchmarks, including Online-Mind2Web, WebVoyager, and AndroidWorld, while delivering lower latency when executing commands.

Unlike traditional AI models, which interact with software through structured application programming interfaces, Gemini 2.5 Computer Use works from screenshots of the interface and generates the specific user actions needed to operate it. The process works as follows: the model receives the task request, the current screenshot, and a history of previously performed actions. It then analyzes the interface and selects a suitable action, such as clicking a button or entering text, which is executed on the client side. A new screenshot is then sent back to the model, and the loop continues until the task is complete.
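The loop described above can be sketched in a few lines of Python. This is only an illustration of the control flow, not Google's client code: `Action`, `run_agent_loop`, `scripted_model`, and the three callbacks are hypothetical names, and the model call is replaced by a stand-in that replays a fixed script. A real integration would call the Gemini API inside `propose_action` and drive a browser inside `execute_action`.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Action:
    """One UI action (hypothetical shape), e.g. Action("click", {"x": 120, "y": 48})."""
    name: str
    args: dict = field(default_factory=dict)


def run_agent_loop(
    task: str,
    take_screenshot: Callable[[], bytes],
    propose_action: Callable[[str, bytes, List[Action]], Optional[Action]],
    execute_action: Callable[[Action], None],
    max_steps: int = 20,
) -> List[Action]:
    """Repeat screenshot -> model -> action until the model stops or max_steps is hit."""
    history: List[Action] = []
    for _ in range(max_steps):
        # 1. Capture the current state of the interface.
        screenshot = take_screenshot()
        # 2. Ask the model for the next action, given task, screenshot, and history.
        action = propose_action(task, screenshot, history)
        if action is None:
            # The model signals that the task is finished.
            break
        # 3. Execute the action on the client side and record it.
        execute_action(action)
        history.append(action)
    return history


def scripted_model(script: List[Action]):
    """Stand-in for the model: replays a fixed list of actions, then stops."""
    def propose(task: str, screenshot: bytes, history: List[Action]) -> Optional[Action]:
        return script[len(history)] if len(history) < len(script) else None
    return propose
```

The `max_steps` cap is a design choice of this sketch, added so that a model that never signals completion cannot loop forever.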

Google demonstrated the Gemini 2.5 Computer Use model with several examples. In one, an agent sorted sticky notes on a virtual whiteboard; in another, it transferred pet information from a website into a customer relationship management system. The demonstration videos were played back at accelerated speed.

The model currently supports 13 different actions and works best in web browsers. Google noted that it is not yet optimized for tasks on desktop operating systems, though it already shows potential on mobile devices.

The company has also implemented safety measures to prevent abuse. Every action undergoes a safety check before it is executed. Developers can block certain actions entirely or require explicit user confirmation, especially for high-risk operations such as financial transactions.
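On the developer side, this kind of policy can be pictured as a small gate that runs before each action. The sketch below is an assumption about how such a gate might look in client code, not the Gemini API's actual safety interface: `Decision`, `check_action`, `guarded_execute`, and the action names used with them are all hypothetical.

```python
from enum import Enum
from typing import Callable, Set


class Decision(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    CONFIRM = "confirm"


def check_action(name: str, blocked: Set[str], needs_confirmation: Set[str]) -> Decision:
    """Classify a proposed action against the developer's policy."""
    if name in blocked:
        return Decision.BLOCK
    if name in needs_confirmation:
        return Decision.CONFIRM
    return Decision.ALLOW


def guarded_execute(
    name: str,
    execute: Callable[[str], None],
    confirm: Callable[[str], bool],
    blocked: Set[str],
    needs_confirmation: Set[str],
) -> bool:
    """Run the action only if policy allows it; return True if it was executed."""
    decision = check_action(name, blocked, needs_confirmation)
    if decision is Decision.BLOCK:
        return False
    if decision is Decision.CONFIRM and not confirm(name):
        # The user declined a high-risk action, so it is skipped.
        return False
    execute(name)
    return True
```

Here `confirm` would typically prompt the end user, for example before an action labeled `submit_payment`, while `blocked` lists actions the agent must never perform.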

Some internal teams at Google already use the model to test user interfaces and automate workflows on platforms such as Google Search and the Firebase app development platform. External developers in the Early Access Program are using it to build automation tools and workflow assistants.

To start using the model, developers can go to Google AI Studio or Vertex AI. Google also provides a demo environment, hosted by Browserbase, for testing and experimentation.